Telepresence Release Notes

Version 2.20.2 (January 23, 2025)

Upgraded Golang to 1.23.5

Telepresence now uses the latest version of Golang. This update resolves CVE-2024-45341 and CVE-2024-45336.

Version 2.20.1 (December 06, 2024)

Fixed an issue where Telepresence wouldn't create a cache directory

When installing Telepresence 2.20 and running telepresence login for the first time, Telepresence didn't create a cache directory. This resulted in an error until the user manually created it. Now, Telepresence automatically creates the directory during a new installation.

Version 2.20.0 (November 15, 2024)

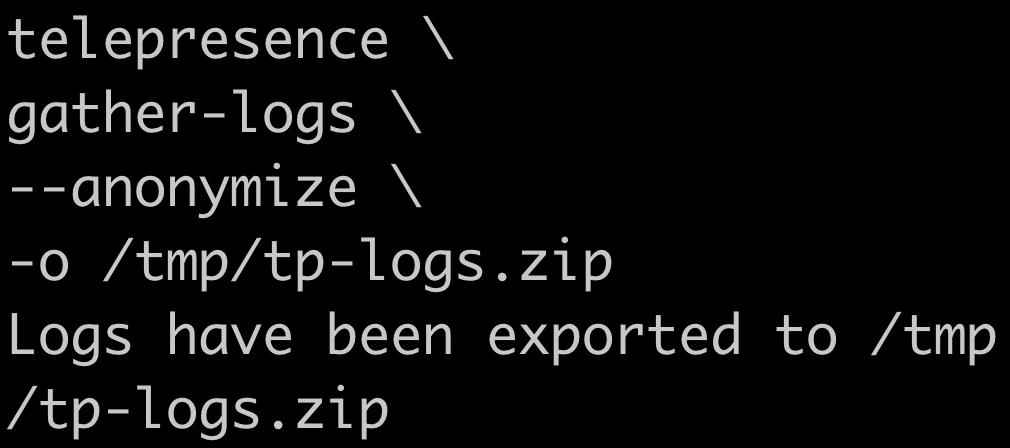

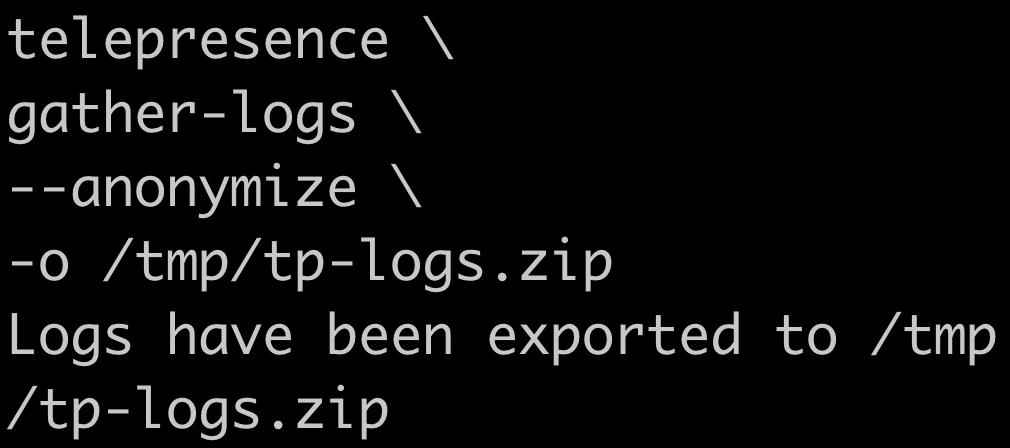

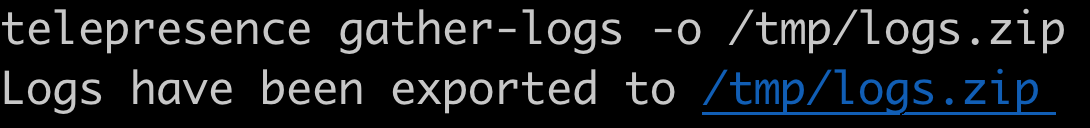

Added a timestamp for the telepresence_logs.zip filename

The telepresence_logs.zip filename now includes a timestamp.

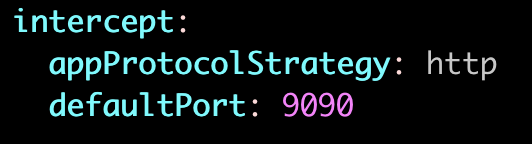

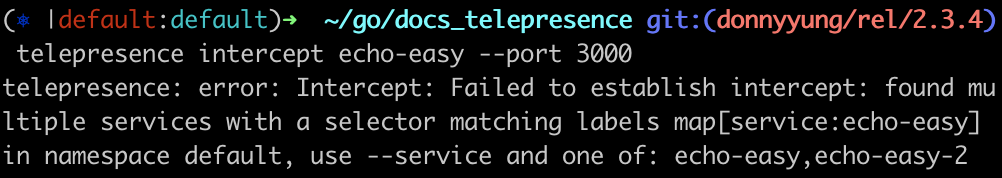

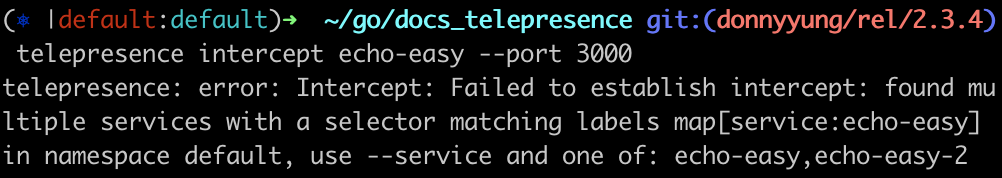

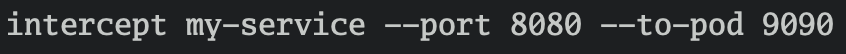

Enabled intercepts of workloads that don’t have a service

Telepresence can now intercept workloads that don’t have an associated service. The intercept then targets a container port instead of a service port. You can enable the new behavior by adding a telepresence.getambassador.io/inject-container-ports annotation, where the value is a comma-separated list of port identifiers consisting of either the name or the port number of a container port (optionally suffixed with /TCP or /UDP). For more information, see Intercepting without a service.

Published the OSS version of the telepresence Helm chart

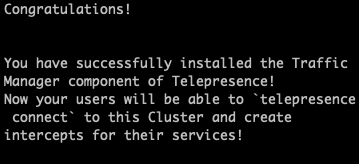

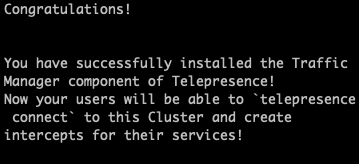

The OSS version of the telepresence Helm chart is now available at ghcr.io/telepresenceio/telepresence-oss and can be installed using the command:

helm install traffic-manager oci://ghcr.io/telepresenceio/telepresence-oss --namespace ambassador --version 2.20.0

The chart documentation is published at ArtifactHUB.

Added syntax control for the environment file created with the intercept flag --env-file

You can now use --env-syntax to control the syntax of the file created with the intercept flag --env-file. Valid syntaxes are docker, compose, sh, csh, cmd, and ps. The sh, csh, and ps syntaxes also accept the export suffix. For more information, see Environment variables.

Added Argo Rollouts support for workloads

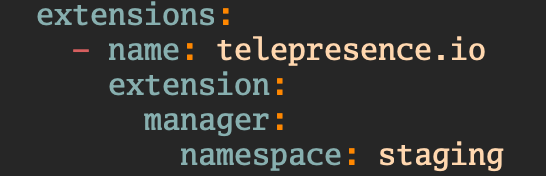

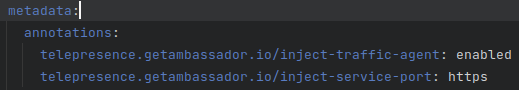

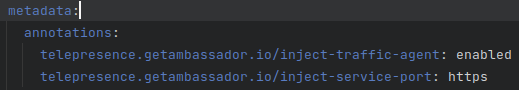

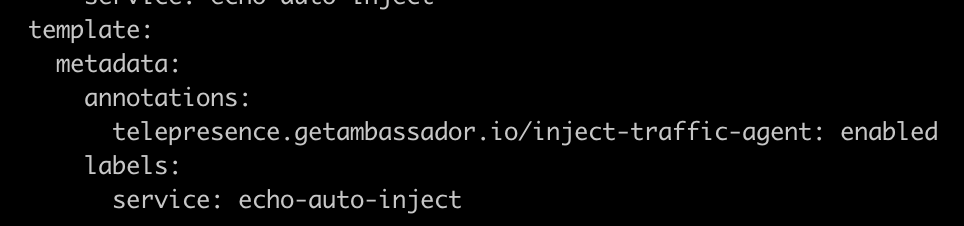

You can now opt in to support for workloads that use Argo Rollouts. The behavior is controlled by the workloads.argoRollouts.enabled Helm chart value. We recommend setting the annotation telepresence.getambassador.io/inject-traffic-agent: enabled to avoid the creation of unwanted revisions. For more information, see Enable Argo Rollouts.

Added --create-namespace flag to the telepresence helm install command

You can now use the --create-namespace flag (default true) with the telepresence helm install command. If it’s explicitly set to false, no attempt is made to create a namespace for the Traffic Manager, and the command fails if the namespace is missing. For more information, see Install into custom namespace.

Introduced DNS fallback on Windows

A network.defaultDNSWithFallback config option is now available on Windows. It causes the DNS resolver to fall back to the resolver that was first in the list before Telepresence established a connection. The default is true because it provides the best experience. For backward compatibility, set it to false.

Note: When it is set to false, there can be issues when Telepresence connects, because utilities that use nslookup or node.resolveXXX to look up addresses will only query the first server in the list.
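To make the fallback order concrete, here is a minimal Python sketch. It is a model of the behavior described above, not Telepresence's Windows DNS code: the with_fallback helper is hypothetical, and plain lookup tables stand in for the cluster resolver and for the resolver that was first in the list before Telepresence connected.

```python
from typing import Callable, Optional

# A resolver maps a host name to an IP address, or to None when it has no
# answer. Plain dicts stand in for real DNS servers in this sketch.
Resolver = Callable[[str], Optional[str]]


def with_fallback(telepresence: Resolver, original_first: Resolver,
                  fallback_enabled: bool = True) -> Resolver:
    """Consult the Telepresence resolver first; when it has no answer and
    fallback is enabled (the documented default), ask the resolver that was
    first in the list before Telepresence connected."""
    def resolve(name: str) -> Optional[str]:
        answer = telepresence(name)
        if answer is None and fallback_enabled:
            answer = original_first(name)
        return answer
    return resolve


cluster_dns = {"echo.default.svc.cluster.local": "10.96.0.42"}
corporate_dns = {"intranet.example.com": "192.0.2.10"}

resolve = with_fallback(cluster_dns.get, corporate_dns.get)
print(resolve("echo.default.svc.cluster.local"))  # 10.96.0.42
print(resolve("intranet.example.com"))            # 192.0.2.10
```

With fallback_enabled=False, the second lookup returns None in this model, which mirrors the note about tools that only query the first server.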

Brew now supports macOS (amd64/arm64) and Linux (amd64)

The brew formula can now dynamically support macOS (amd64/arm64) and Linux (amd64) in a single formula.

Added the ability to provide an externally provisioned webhook secret

A new value, supplied, is now available for agentInjector.certificate.method. It fully disables generation of the mutating webhook's secret, allowing the chart to use the values of a pre-existing secret whose name is set by agentInjector.secret.name. Previously, the install would fail when it attempted to create or update the externally managed secret. For more information, see Customizing the Traffic Manager.

Added support to allow a PTR query for a DNS server to return the cluster domain

The nslookup program on Windows uses a DNS pointer record (PTR) query to retrieve its displayed Server property. The Telepresence DNS resolver now returns the cluster domain for this type of query.

Added schedulerName to PodTemplate

You can now use a Helm chart value called schedulerName. With this feature, you can specify which Kubernetes scheduler allocates Telepresence resources, including the Traffic Manager and hook Pods. For more information, see Customizing the Traffic Manager.

The Ambassador Agent is no longer enabled by default

The Ambassador agent service, which is used for collecting information about services in the cluster and sending them to Ambassador Cloud, is no longer enabled by default.

Use nftables instead of iptables-legacy

We introduced iptables-legacy because there was an issue using Telepresence with Fly.io, where nftables wasn't supported by the kernel. Fly.io fixed this issue, so Telepresence now uses nftables again. This ensures that Telepresence works on modern systems that lack support for iptables-legacy.

Fixed an issue where the --http-path-regexp intercept flag yielded an illegal argument error

When using the --http-path-regexp intercept flag, the flag passed on to the Traffic Agent was incorrect and resulted in an error about a --path-regexp flag. Now, the flag is correctly passed to the Traffic Agent as --path-regex instead of --path-regexp.

Fixed an issue that caused repeated calls from the systray application to the daemon

Previously, the systray application called the daemon repeatedly to update the menu, even when the menu wasn't visible. This was due to a limitation in the systray component, which didn't send an event when the menu was opened. Now, instead of being called repeatedly, the daemon is called when the systray menu opens. This saves space in the connector log and makes the systray application more stable and less demanding on resources.

Fixed an issue that caused an incorrect priority for a Docker Compose Specification service

The environment declared in a Docker Compose Specification service used as an intercept handler had a lower priority than the environment declared in the container that was intercepted. This caused the Docker Compose environment to be overwritten. Now, the Docker Compose environment has higher priority.

The following is the priority order when merging the environment:

- Environment in the handler of the Intercept Specification

- Environment in the service declaration in the Docker Compose Specification

- Environment in the intercepted container
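The merge order above amounts to "later sources override earlier ones". A minimal Python sketch, assuming each environment is a simple name-to-value mapping; merge_environment is a hypothetical helper and the variable names are illustrative only:

```python
def merge_environment(container_env: dict[str, str],
                      compose_env: dict[str, str],
                      handler_env: dict[str, str]) -> dict[str, str]:
    """Merge environments from lowest to highest priority so that later
    sources override earlier ones."""
    merged: dict[str, str] = {}
    # Lowest priority first: intercepted container, then the Compose
    # service declaration, then the Intercept Specification handler.
    for source in (container_env, compose_env, handler_env):
        merged.update(source)
    return merged


container = {"LOG_LEVEL": "info", "DB_HOST": "db.prod.svc", "PORT": "8080"}
compose = {"DB_HOST": "localhost", "PORT": "9090"}
handler = {"PORT": "3000"}

print(merge_environment(container, compose, handler))
# {'LOG_LEVEL': 'info', 'DB_HOST': 'localhost', 'PORT': '3000'}
```

Before the fix, the container's DB_HOST would have won over the Compose value; in this model that corresponds to applying the container environment last.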

Fixed an issue that caused a crash in Traffic Manager when configured with agentInjector.enabled=false

A Traffic Manager that was installed with the Helm value agentInjector.enabled=false crashed when a client used the commands telepresence version or telepresence status. The commands called a method on the Traffic Manager that panicked if a Traffic Agent wasn't present. Now, this method returns the standard Unavailable error code, which is expected by the caller.

Fixed an issue that didn’t allow a comma-separated list of daemons for the gather-logs command

The name of the telepresence gather-logs flag --daemons suggested that the argument could contain more than one daemon, but it couldn't. Now, you can use a comma-separated list (for example, telepresence gather-logs --daemons root,user).

Fixed an issue where the Traffic Agent would route traffic to localhost during periods when an intercept wasn't active

This issue made it impossible for an application to bind to the Pod's IP, and it also meant that service meshes binding to the podIP would get bypassed, both during and after an intercept had been made. Now, the Traffic Agent forwards non-intercepted requests to the Pod's IP, thereby enabling the application to either bind to localhost or to that IP.

Fixed an issue where the root daemon wouldn't start when the sudo timeout was set to zero

The root daemon wouldn’t start when sudo was configured with timestamp_timeout=0. The logic first requested root privileges using a sudo call and then relied on the caching of these privileges so that a subsequent call using --non-interactive was guaranteed to succeed. Now, the logic uses a single sudo call and relies solely on sudo to print an informative prompt and start the daemon in the background.

Fixed an issue where a telepresence connect --docker failed when attempting to connect to a minikube that uses a docker driver

This issue occurred because the containerized daemon didn’t have access to the minikube docker network. Telepresence now detects an attempt to connect to that network and attaches it to the daemon container as needed.

Fixed an issue that caused a race condition in the Traffic Agent injector when using inject annotation

Applying multiple deployments that used the telepresence.getambassador.io/inject-traffic-agent: enabled annotation caused a race condition. This resulted in a large number of new pods that eventually had to be deleted, or sometimes in pods that didn't contain a Traffic Agent.

Fixed an issue that caused the incorrect use of a custom agent security context

The Traffic Manager helm chart now correctly uses a custom agent security context if one is provided.

Version 2.19.6

Panic in traffic-manager when using Istio integration with sidecars without a workloadSelector

A traffic-manager that was installed with --set trafficManager.serviceMesh.type=istio would panic if it encountered an Istio Sidecar definition that didn't declare a workloadSelector.

Fix bug in workload cache, causing endless recursion when a workload uses the same name as its owner.

The workload cache was keyed by name and namespace, but not by kind, so a workload named the same as its owner workload would be found using the same key. This led to the workload finding itself when looking up its owner, which in turn resulted in an endless recursion when searching for the topmost owner.
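The following Python sketch illustrates why including the kind in the cache key matters. The WorkloadKey, Workload, and topmost_owner names are hypothetical and only model the lookup described above; they are not Telepresence's Go types. The cycle guard is an extra safety net added for the sketch.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class WorkloadKey:
    kind: str       # e.g. "Deployment" or "ReplicaSet"
    namespace: str
    name: str


@dataclass
class Workload:
    key: WorkloadKey
    owner: Optional[WorkloadKey] = None  # None for a top-level workload


def topmost_owner(cache: dict[WorkloadKey, Workload], key: WorkloadKey) -> WorkloadKey:
    """Follow owner references up to the top-level workload. Because the key
    includes the kind, a workload named like its owner no longer finds itself."""
    seen: set[WorkloadKey] = set()
    current = cache[key]
    while current.owner is not None:
        if current.key in seen:
            raise ValueError(f"owner cycle detected at {current.key}")
        seen.add(current.key)
        current = cache[current.owner]
    return current.key


deployment = WorkloadKey("Deployment", "default", "echo")
replicaset = WorkloadKey("ReplicaSet", "default", "echo")  # same name as its owner
cache = {
    deployment: Workload(deployment),
    replicaset: Workload(replicaset, owner=deployment),
}
print(topmost_owner(cache, replicaset))
# WorkloadKey(kind='Deployment', namespace='default', name='echo')
```

With a key of only (namespace, name), both entries would collide and the ReplicaSet would resolve to itself, which is the recursion the fix removes.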

FailedScheduling events mentioning node availability considered fatal when waiting for agent to arrive.

The traffic-manager considers some events fatal when waiting for a traffic-agent to arrive after an injection has been initiated. This logic would trigger on events like "Warning FailedScheduling 0/63 nodes are available" and kill the wait, although those events indicate a recoverable condition. This is now fixed so that such events are logged but the wait continues.

Version 2.19.5 (May 15, 2024)

Prevent bad cloud-daemon behavior when using WSL.

The cloud-daemon was not usable on a Linux box where dbus was unavailable. The systray panicked (unless disabled) and the notifier exited with an error, which caused the cloud-daemon to exit as well.

Docker aliases deprecation caused failure to detect Kind cluster.

The logic for detecting whether a cluster is a local Kind cluster, and therefore needs some special attention when using telepresence connect --docker, relied on the presence of Aliases in the Docker network that a Kind cluster sets up. From Docker version 26 onward, this value is no longer used, but the corresponding information can instead be found in the new DNSNames field.

Version 2.19.4 (April 23, 2024)

Creation of individual pods was blocked by the agent-injector webhook.

An attempt to create a pod was blocked unless it was provided by a workload. Hence, commands like kubectl run -i busybox --rm --image=curlimages/curl --restart=Never -- curl echo-easy.default would be blocked from executing.

Version 2.19.3 (April 11, 2024)

Fix panic due to root daemon not running.

If a telepresence connect was made at a time when the root daemon was not running (an abnormal condition) and a subsequent intercept was then made, a panic would occur when the port-forward to the agent was set up. This is now fixed so that the initial telepresence connect is refused unless the root daemon is running.

Version 2.19.2 (April 05, 2024)

Fix Cloud-daemon termination when update is available.

The cloud-daemon terminated when it detected that an update was available, which resulted in a telepresence disconnect from the cluster.

Version 2.19.1 (April 04, 2024)

Get rid of telemount plugin stickiness

The datawire/telemount plugin, which is automatically downloaded and installed, was never updated once the installation was made. Telepresence now checks for the latest release of the plugin and caches the result of that check for 24 hours. If a new version is available, it is installed and used.

Use route instead of address for CIDRs with masks that don't allow "via"

A CIDR with a mask that leaves fewer than two host bits (/31 or /32 for IPv4) cannot be added as an address to the VIF, because such addresses must have bits available for a "via" IP.

The logic was modified to allow such CIDRs to become static routes, using the VIF base address as their "via", rather than being VIF addresses in their own right.
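The host-bit rule can be checked with Python's standard ipaddress module. This is a minimal sketch of the decision only; classify_cidr is a hypothetical helper, and applying the same "fewer than two host bits" rule to IPv6 (/127, /128) is an assumption, since the note only names the IPv4 cases.

```python
import ipaddress


def classify_cidr(cidr: str) -> str:
    """Return "route" for subnets that leave fewer than two host bits
    (added as static routes via the VIF base address) and "address" for
    subnets that can be added to the VIF directly."""
    net = ipaddress.ip_network(cidr, strict=False)
    host_bits = net.max_prefixlen - net.prefixlen
    # Assumption: the same rule is applied to IPv6 prefixes.
    return "route" if host_bits < 2 else "address"


for cidr in ("10.0.0.0/16", "10.0.0.0/30", "10.0.0.4/31", "10.0.0.5/32"):
    print(cidr, classify_cidr(cidr))
# 10.0.0.0/16 address
# 10.0.0.0/30 address
# 10.0.0.4/31 route
# 10.0.0.5/32 route
```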

Containerized daemon created cache files owned by root

When using telepresence connect --docker to create a containerized daemon, that daemon would sometimes create files in the cache that were owned by root, which then caused problems when connecting without the --docker flag.

Remove large number of requests when traffic-manager is used in large clusters.

The traffic-manager would make a very large number of API requests during cluster start-up or when many services were changed for other reasons. The logic that did this was refactored, and the number of queries was significantly reduced.

Don't patch probes on replaced containers.

A container that is being replaced by a telepresence intercept --replace invocation will have no liveness, readiness, or startup probes. Telepresence didn't take this into consideration when injecting the traffic-agent, but now it refrains from patching symbolic port names of those probes.

Don't rely on context name when deciding if a kind cluster is used.

The code that auto-patches the kubeconfig when connecting to a kind cluster from within a docker container relied on the context name starting with "kind-". Although all contexts created by kind have that prefix, the user is still free to rename them or to create other contexts using the same connection properties. The logic was therefore changed to look for a loopback service address instead.
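As a rough illustration of a name-independent check, the Python sketch below inspects the server URL of a kubeconfig cluster entry and reports whether it points at a loopback address. Reading "loopback service address" as the kubeconfig server address is an assumption, and uses_loopback_server is a hypothetical helper, not Telepresence's implementation.

```python
import ipaddress
from urllib.parse import urlparse


def uses_loopback_server(server_url: str) -> bool:
    """Return True when a kubeconfig cluster's server URL points at a
    loopback address, whatever the context happens to be called."""
    host = urlparse(server_url).hostname or ""
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        # Not an IP literal, for example a DNS name other than localhost.
        return False


print(uses_loopback_server("https://127.0.0.1:44321"))      # True
print(uses_loopback_server("https://[::1]:6443"))           # True
print(uses_loopback_server("https://k8s.example.com:6443")) # False
```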

Version 2.19.0 (February 12, 2024)

Add ability to configure the systray application.

The systray application can now be configured via a systray entry in the config.yml file. The entry has the properties enabled (defaults to true), includeContexts (list of Kubernetes contexts to continuously scan for namespaces, where empty means all contexts configured in the current KUBECONFIG), and excludeContexts (Kubernetes contexts to exclude).

Include the image for the traffic-agent in the output of the version and status commands.

The version and status commands will now output the image that the traffic-agent will be using when injected by the agent-injector.

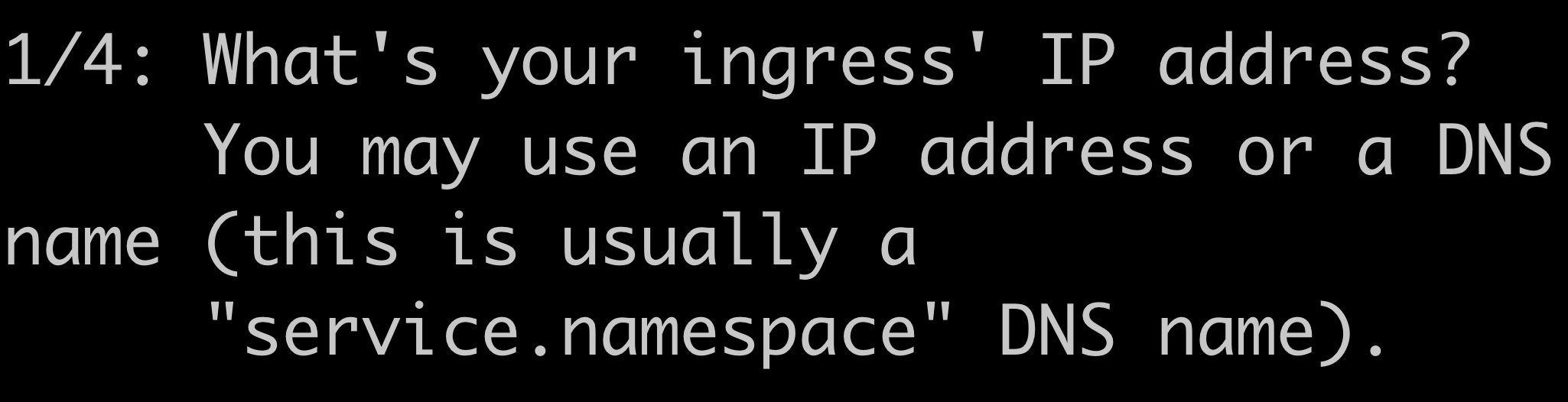

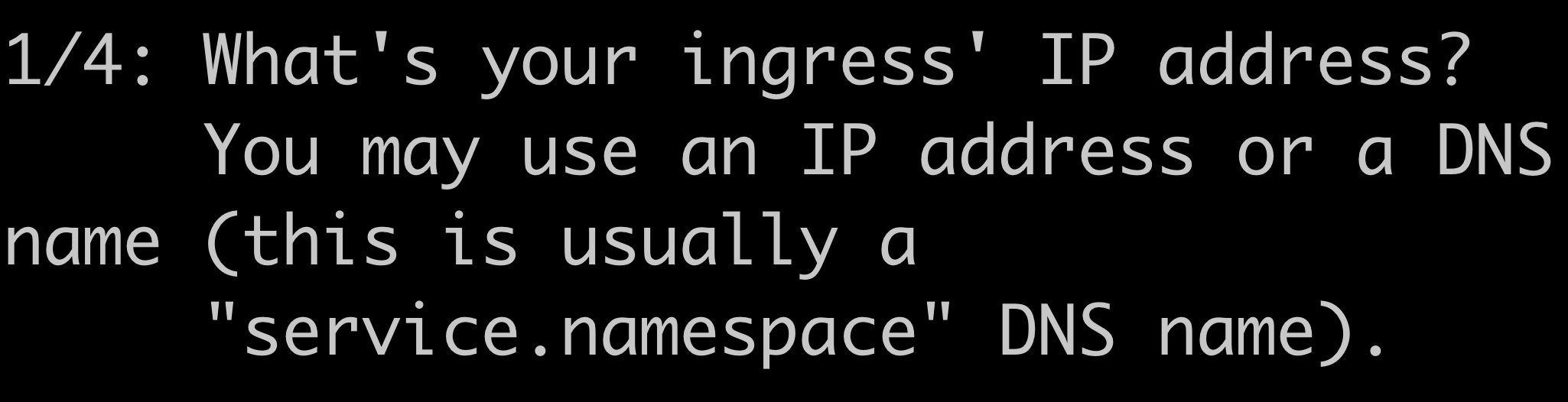

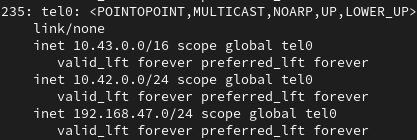

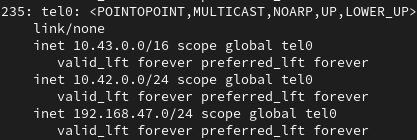

Custom DNS using the client DNS resolver.

A new telepresence connect --proxy-via CIDR=WORKLOAD flag was introduced, allowing Telepresence to translate DNS responses matching specific subnets into virtual IPs that are used locally. Those virtual IPs are then routed (with reverse translation) via the pods of a given workload. This makes it possible to handle custom DNS servers that resolve domains into loopback IPs.
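The translation step can be modeled as a two-way table between real answers and locally allocated virtual IPs. The Python sketch below is hypothetical: the VirtualIPTable class and the 198.18.0.0/16 virtual range are illustrative choices, not what Telepresence uses, and routing through the workload's pods is not modeled.

```python
import ipaddress


class VirtualIPTable:
    """Hypothetical model of --proxy-via style DNS translation."""

    def __init__(self, proxied_subnet: str, virtual_subnet: str) -> None:
        self.proxied = ipaddress.ip_network(proxied_subnet)
        # Lazy iterator of free virtual IPs; next() raises StopIteration
        # once the pool is exhausted.
        self._pool = ipaddress.ip_network(virtual_subnet).hosts()
        self._to_virtual = {}  # real address -> virtual address
        self._to_real = {}     # virtual address -> real address

    def translate_answer(self, ip: str) -> str:
        """Rewrite one DNS answer; addresses outside the proxied subnet pass through."""
        real = ipaddress.ip_address(ip)
        if real not in self.proxied:
            return ip
        if real not in self._to_virtual:
            virtual = next(self._pool)
            self._to_virtual[real] = virtual
            self._to_real[virtual] = real
        return str(self._to_virtual[real])

    def reverse(self, virtual_ip: str) -> str:
        """Map a virtual destination back to the address the DNS server returned."""
        return str(self._to_real[ipaddress.ip_address(virtual_ip)])


table = VirtualIPTable("127.0.0.0/8", "198.18.0.0/16")
print(table.translate_answer("127.0.0.1"))  # 198.18.0.1
print(table.translate_answer("10.1.2.3"))   # 10.1.2.3 (not proxied)
print(table.reverse("198.18.0.1"))          # 127.0.0.1
```

A loopback answer such as 127.0.0.1 is useless on the workstation, so the sketch hands out a routable stand-in and remembers the pairing for the return path.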

Make namespace-id command default to the manager's namespace.

The command

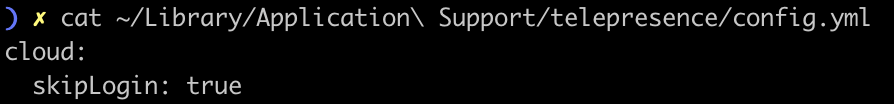

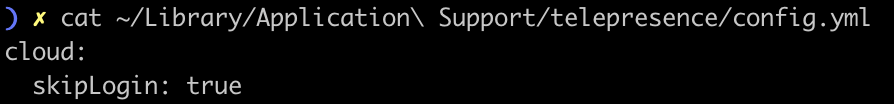

telepresence namespace-id will no longer require a --namespace flag. When not given, the command will default to the namespace of where the traffic-manager is installed or will be installed by default.Never ask for login when traffic-manager is licensed.

A licensed traffic-manager does not require that a connecting user is logged in, and telepresence will therefore no longer prompt a user to login when connecting to it.

The skipLogin configuration is no longer relevant when the traffic-manager is licensed. Instead, it only suppresses the login prompt when a login is required, so that an error is printed instead.

The telepresence license --id command was broken

The telepresence license --id <namespace-id> command reported a conflict with the --host-domain flag and exited, regardless of the setting of that flag.

Make agent registry, name, and tag configurable individually.

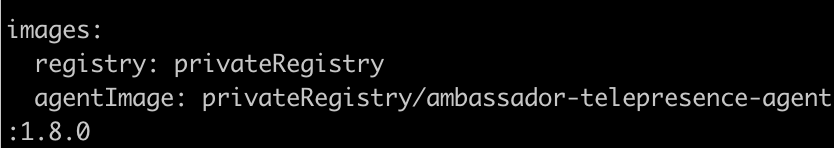

Prior to this change, it was not possible to just provide a new tag or a new registry for the agent image in the Helm chart and have that override the default fetched from SystemA. Now all three parts can be provided individually or in combinations. An attempt will be made to fetch the preferred image from Ambassador Cloud unless all three are provided.
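The combination rule can be sketched in Python as follows. resolve_agent_image is a hypothetical helper, fetch_preferred stands in for the lookup of the preferred image from Ambassador Cloud, and the example registry, name, and tag values are made up for illustration.

```python
from typing import Callable, Optional, Tuple

Image = Tuple[str, str, str]  # (registry, name, tag)


def resolve_agent_image(registry: Optional[str], name: Optional[str],
                        tag: Optional[str],
                        fetch_preferred: Callable[[], Image]) -> str:
    """Combine individually configured parts of the agent image with the
    preferred default. The lookup is skipped when all three parts are given."""
    if registry and name and tag:
        return f"{registry}/{name}:{tag}"
    default_registry, default_name, default_tag = fetch_preferred()
    return (f"{registry or default_registry}/"
            f"{name or default_name}:{tag or default_tag}")


def example_lookup() -> Image:
    # Hypothetical values; the real preferred image comes from Ambassador Cloud.
    return ("example.registry.io/datawire", "agent", "1.0.0")


print(resolve_agent_image(None, None, "1.1.0", example_lookup))
# example.registry.io/datawire/agent:1.1.0
```

Overriding only the tag keeps the default registry and name, which was not possible before this change.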

Make cloud-daemon functional without a user-interface.

Running the cloud-daemon without a user interface resulted in errors being generated in its log. Trying to run the cloud-daemon in a docker container made it fail and exit (no dbus available). This is now fixed by making the cloud-daemon sensitive to the environment in which it executes, so that the systray app and the notifier never start unless a UI is present.

Include non-default zero values in output of telepresence config view.

The telepresence config view command will now print zero values in the output when the default for the value is non-zero.

Restore ability to run the telepresence CLI in a docker container.

The improvements made to run the telepresence daemon in docker using telepresence connect --docker made it impossible to run both the CLI and the daemon in docker. This is now fixed, and the user and root daemons are also merged in this scenario when the container runs as root.

Remote mounts when intercepting with the --replace flag.

A telepresence intercept --replace did not correctly mount all volumes, because when the intercepted container was removed, its mounts were no longer visible to the agent-injector when it was subjected to a second invocation. The container is now kept in place, but with an image that sleeps indefinitely.

Intercepting with the --replace flag will no longer require all subsequent intercepts to use --replace.

A telepresence intercept --replace no longer switches the mode of the intercepted workload, which previously forced all subsequent intercepts on that workload to use --replace until the agent was uninstalled. Instead, --replace can be used interchangeably, just like any other intercept flag.

Kubeconfig exec authentication with context names containing colon didn't work on Windows

The logic added to allow the root daemon to connect directly to the cluster, using the user daemon as a proxy for exec-type authentication in the kubeconfig, didn't take into account that a context name sometimes contains the colon ":" character. That character cannot be used in filenames on Windows because it is the drive letter separator.
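One way to derive a Windows-safe filename from a context name is to replace the characters Windows reserves in filenames. The Python sketch below uses a hypothetical context_filename helper; the replacement scheme is an assumption, not necessarily what Telepresence does, and a simple substitution like this can map two distinct names to the same file, so a real implementation may prefer a reversible encoding.

```python
import re

# Characters reserved in Windows file names; ':' is the drive letter separator.
_WINDOWS_RESERVED = re.compile(r'[<>:"/\\|?*]')


def context_filename(context: str) -> str:
    """Replace characters that Windows does not allow in file names with '_'."""
    return _WINDOWS_RESERVED.sub("_", context)


print(context_filename("arn:aws:eks:us-east-1:123456789012:cluster/demo"))
# arn_aws_eks_us-east-1_123456789012_cluster_demo
```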

Provide agent name and tag as separate values in Helm chart

The AGENT_IMAGE environment variable was a concatenation of the agent's name and tag. This is now changed so that the environment instead contains AGENT_IMAGE_NAME and AGENT_IMAGE_TAG, and AGENT_IMAGE is removed. Also, a new environment variable, REGISTRY, is added, which provides the registry of the traffic-manager image. AGENT_REGISTRY is no longer required and defaults to REGISTRY if not set.

Environment interpolation expressions were prefixed twice.

Telepresence would sometimes prefix environment interpolation expressions in the traffic-agent twice, so that an expression that looked like $(SOME_NAME) in the app-container ended up as $(_TEL_APP_A__TEL_APP_A_SOME_NAME) in the corresponding expression in the traffic-agent.

Panic in root-daemon on darwin workstations with full access to cluster network.

A darwin machine with full access to the cluster's subnets never creates a TUN device, and a missing check for whether the device actually existed caused a panic in the root daemon.

Version 2.18.1 (December 27, 2023)

False conflict when intercepting multiple services with the same service port number that targeted the same pod.

Telepresence would report that two intercepts on different service ports were conflicting if those ports used the same service port number and targeted the same pod.

Show allow-conflicting-subnets in telepresence status and telepresence config view.

The telepresence status and telepresence config view commands didn't show the allowConflictingSubnets CIDRs because the value wasn't propagated correctly to the CLI.

Version 2.18.0 (December 15, 2023)

Multiple connections in the Intercept Specification.

The Intercept Specification can now accommodate multiple connections, making it possible to have simultaneous intercepts that make use of different Kubernetes contexts and/or namespaces.

New properties added to the Connection object of the Intercept Spec.

The properties Expose, Hostname, and AllowConflictingSubnets were added to the Connection object of the Intercept Specification.

The directory of the Intercept Specification is now the default docker context.

An Intercept Specification that references a Docker build or a Docker compose Service that declares a build, will now set the default context for such builds to the directory from where the Intercept Specification was loaded.

The tray application can manage multiple connections and docker mode.

The tray application can now manage multiple connections and it is possible to choose if new connections should be using a containerized daemon or a daemon running on the host.

Permit templates in the JSON-schema for the Intercept Specification.

The JSON-schema for the Intercept Specification will now allow most properties to contain template specifications in the form {{<template spec>}}. The exceptions are properties that are used for referencing other objects within the specification.

The published- and target-port were swapped when exposing Docker compose Service ports.

The socat container that exposes docker compose ports by bridging the "telepresence" network to the daemon container network had the published- and target-port swapped, making it impossible to use declarations where the two numbers differed.

It is now possible to use a host-based connection and containerized connections simultaneously.

Only one host-based connection can exist, because that connection alters the DNS to reflect the namespace of the connection, but it's now possible to create additional connections using --docker while retaining the host-based connection.

Ability to set the hostname of a containerized daemon.

The hostname of a containerized daemon defaults to the container's ID in Docker. You can now override the hostname using telepresence connect --docker --hostname <a name>.

New --multi-daemon flag to enforce a consistent structure for the status command output.

The output of telepresence status when using --output json or --output yaml shows either an object where user_daemon and root_daemon are top-level elements or, when multiple connections are used, an object where a connections list contains objects with those daemons. The --multi-daemon flag enforces the latter structure even when only one daemon is connected, so that the output can be parsed consistently. The former structure is kept for backward compatibility with existing parsers.

Make output from telepresence quit more consistent.

A quit (without -s) just disconnects the host user and root daemons but will quit a container based daemon. The message printed was simplified to remove some have/has is/are errors caused by the difference.

Fix errors about a bad TLS certificate when refreshing the mutator-webhook secret.

The agent-injector service will now refresh the secret used by the mutator-webhook each time a new connection is established, thus preventing the certificates from going out of sync when the secret is regenerated.

Keep telepresence-agents configmap in sync with pod states.

An intercept attempt that resulted in a timeout due to failure of injecting the traffic-agent left the

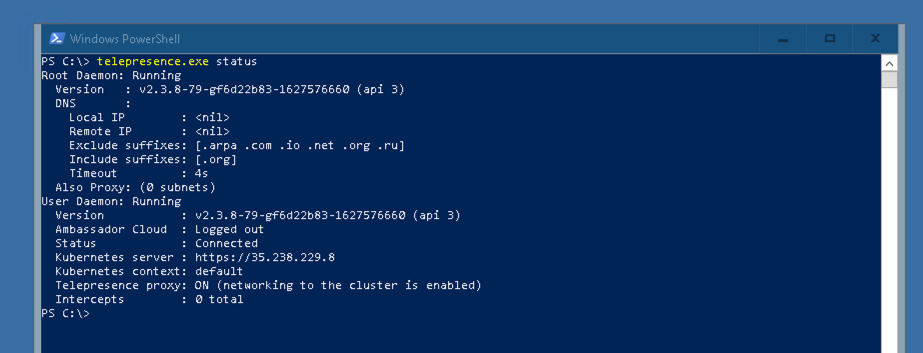

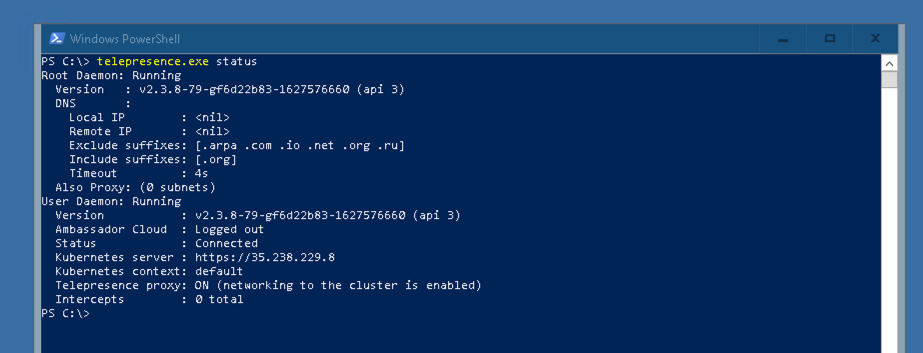

telepresence-agents configmap in a state that indicated that an agent had been added, which caused problems for subsequent intercepts after the problem causing the first failure had been fixed.The telepresence status command will now report the status of all running daemons.

A telepresence status, issued when multiple containerized daemons were active, would error with "multiple daemons are running, please select one using the --use <match> flag". This is now fixed so that the command instead reports the status of all running daemons.

The telepresence version command will now report the version of all running daemons.

A telepresence version, issued when multiple containerized daemons were active, would error with "multiple daemons are running, please select one using the --use <match> flag". This is now fixed so that the command instead reports the version of all running daemons.

Multiple containerized daemons can now be disconnected using telepresence quit -s.

A telepresence quit -s, issued when multiple containerized daemons were active, would error with "multiple daemons are running, please select one using the --use <match> flag". This is now fixed so that the command instead quits all daemons.

Version 2.17.1 (November 29, 2023)

Intercepting services with the same name but different namespaces caused a conflict.

The intercept conflict detection mechanism introduced in 2.17.0 incorrectly claimed that global intercepts on services with the same name, but in different namespaces, interfered with each other.

The DNS search path on Windows is now restored when Telepresence quits

The DNS search path that Telepresence uses to simulate the DNS lookup functionality in the connected cluster namespace was not removed by a telepresence quit, resulting in connectivity problems from the workstation. Telepresence will now remove the entries that it has added to the search list when it quits.

The user-daemon would sometimes get killed when used by multiple simultaneous CLI clients.

The user-daemon would die with a "fatal error: concurrent map writes" error in the connector.log, effectively killing the ongoing connection.

Multiple service ports using the same target port would not get intercepted correctly.

Intercepts didn't work when multiple service ports were using the same container port. Telepresence would think that one of the ports wasn't intercepted and therefore disable the intercept of the container port.
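The corrected rule is that a container port stays intercepted as long as any service port targeting it is intercepted. A minimal Python sketch with a hypothetical intercepted_container_ports helper and made-up port names:

```python
from collections import defaultdict


def intercepted_container_ports(service_ports: list[tuple[str, int]],
                                intercepted: set[str]) -> set[int]:
    """service_ports holds (service port name, target container port) pairs.
    A container port is intercepted if ANY service port targeting it is,
    rather than depending on whichever service port was inspected last."""
    by_target: dict[int, list[str]] = defaultdict(list)
    for port_name, target in service_ports:
        by_target[target].append(port_name)
    return {target for target, names in by_target.items()
            if any(name in intercepted for name in names)}


ports = [("http", 8080), ("http-alt", 8080), ("metrics", 9090)]
print(intercepted_container_ports(ports, {"http"}))  # {8080}
```

Here http-alt is not intercepted, yet container port 8080 must stay intercepted because http targets it too.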

Root daemon refuses to disconnect.

The root daemon would sometimes hang forever when attempting to disconnect due to a deadlock in the VIF-device.

Fix panic in user daemon when traffic-manager was unreachable

The user daemon would panic if the traffic-manager was unreachable. It will now instead report a proper error to the client.

Removal of problematic backward support for versions predating 2.6.0.

The telepresence helm installer will no longer make attempts to discover and convert workloads that were modified by the telepresence client in versions prior to 2.6.0. Users have reported problems with the job performing this discovery, and it was therefore removed from the helm chart because the support for those versions was dropped some time ago.

Version 2.17.0 (November 14, 2023)

Telepresence now has a native Istio integration.

Telepresence can now create Istio objects to manage traffic, preventing conflicts between its configuration and your mesh's networking configuration.

Telepresence will detect intercept conflicts.

When creating a personal intercept, if a header conflicts with those of an existing one, Telepresence will return an error indicating which headers are causing the issue. For instance, if you use the flags --http-header a=b --http-header c=d, it will return an error if another intercept already uses the header --http-header a=b. It now also detects interference with a global intercept.

The "context" field wasn't parsed when executing a specification with Docker Compose.

When executing an intercept specification with the Docker Compose integration, the context field was not correctly interpreted. Consequently, it was impossible to specify the path to a Compose file from any location other than the working directory.

The cloud daemon should not start when calling Telepresence status.

The cloud daemon was started when calling telepresence status, although this command should not trigger any action.

Cloud daemon running state added to the status command.

Calling telepresence status will now display whether the cloud daemon is running, including in JSON and YAML formats.

Changed "current-cluster-id" command to "namespace-id".

namespace-id should now be used to get IDs for licenses. --namespace should be used to identify the namespace of the telepresence installation.

Option to return an error when a personal intercept expires.

A new option, --set=intercept.expiredNotifications=true, has been introduced in the traffic manager. This option is disabled by default. When enabled, it ensures that any request utilizing a personal header associated with an expired intercept results in an error (status code 503). This change aims to eliminate potential confusion that may arise when a user requests an endpoint believing that the intercept is still active when it has actually expired. This feature is only compatible with traffic agent versions greater than or equal to v1.14.0.

Fix the documentation link displayed in the help command.

The command was previously directing users to the OSS website instead of the proprietary documentation.

Additional Prometheus metrics to track intercept/connect activity

This feature adds the following metrics to the Prometheus endpoint: connect_count, connect_active_status, intercept_count, and intercept_active_status. Additionally, the previously existing intercept_count metric has been renamed to active_intercept_count for clarity.

Make the Telepresence client docker image configurable.

The docker image used when running a Telepresence intercept in docker mode can now be configured using the setting images.clientImage. It defaults first to the value of the environment variable TELEPRESENCE_CLIENT_IMAGE, and then to the value preset by the telepresence binary. This configuration setting is primarily intended for testing purposes.

Use traffic-agent port-forwards for outbound and intercepted traffic.

The telepresence TUN-device is now capable of establishing direct port-forwards to a traffic-agent in the connected namespace. That port-forward is then used for all outbound traffic to the device, and also for all traffic that arrives from intercepted workloads. Getting rid of the extra hop via the traffic-manager improves performance and reduces the load on the traffic-manager. The feature can only be used if the client has Kubernetes port-forward permissions to the connected namespace. It can be disabled by setting cluster.agentPortForward to false in config.yml.

Improve outbound traffic performance.

The root-daemon now communicates directly with the traffic-manager instead of routing all outbound traffic through the user-daemon. The root-daemon uses a patched kubeconfig where exec configurations to obtain credentials are dispatched to the user-daemon. This ensures that all authentication plugins execute in user space. The old behavior of routing everything through the user-daemon can be restored by setting cluster.connectFromRootDaemon to false in config.yml.

New networking CLI flag --allow-conflicting-subnets

telepresence connect (and other commands that kick off a connect) now accepts an --allow-conflicting-subnets CLI flag. This is equivalent to client.routing.allowConflictingSubnets in the helm chart, but can be specified at connect time. It will be appended to any configuration pushed from the traffic manager.
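
As a sketch, the Helm chart counterpart of this flag might look like the values.yaml fragment below. It assumes that client.routing.allowConflictingSubnets takes a list of CIDR strings; the subnet shown is a placeholder, not a recommended value.

```yaml
# values.yaml (Helm chart): sketch only.
# Assumption: allowConflictingSubnets is a list of CIDR strings.
client:
  routing:
    allowConflictingSubnets:
      - 10.0.0.0/8   # placeholder: a subnet that overlaps with a local VPN route
```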

Warn if large version mismatch between traffic manager and client.

Print a warning if the minor version diff between the client and the traffic manager is greater than three.

The authenticator binary was removed from the docker image.

The authenticator binary, used when serving proxied exec kubeconfig credential retrieval, has been removed. Its functionality was instead added as a subcommand of the telepresence binary.

Version 2.16.1 (October 12, 2023)

Use different names for the Telepresence client image in OSS and PRO

Both images used the name "telepresence", causing the OSS image to be replaced by the PRO image. The PRO client image is now renamed to "ambassador-telepresence".

Some `--http-xxx` flags didn't get propagated correctly to the traffic-agent.

The whole list of --http flags would get replaced with --http-header=auto unless an --http-header was given. As a result, flags like --http-plaintext were discarded.

Add `--docker-debug` flag to the `telepresence intercept` command.

This flag is similar to --docker-build but starts the container with more relaxed security using the docker run flags --security-opt apparmor=unconfined --cap-add SYS_PTRACE.

Add an `--expose` option to the `telepresence connect` command.

In some situations it is necessary to make some ports available to the host from a containerized telepresence daemon. A repeatable --expose <docker port exposure> flag was therefore added to the connect command.

Prevent agent-injector webhook from selecting from kube-xxx namespaces.

The kube-system and kube-node-lease namespaces should not be affected by a global agent-injector webhook by default. A default namespaceSelector containing a NotIn expression that prevents those namespaces from being selected was therefore added to agentInjector.webhook in the Helm chart.

Backward compatibility for pod template TLS annotations.

Users of Telepresence < 2.9.0 that make use of the pod template TLS annotations were unable to upgrade because the annotation names have changed (now prefixed by "telepresence."), and the environment expansion of the annotation values was dropped. This fix restores support for the old names (while retaining the new ones) and the environment expansion.

Built with go 1.21.3

Built Telepresence with go 1.21.3 to address CVEs.

Match service selector against pod template labels

When listing intercepts (typically by calling telepresence list), service selectors are matched against workloads. Previously the match was made against the labels of the workload, but now it is made against the labels of the workload's pod template. Since a service's selector is actually matched against pods, this is more correct. The most common case where this makes a difference is that statefulsets are now listed when they should be.

Version 2.16.0 (October 02, 2023)

Intercepts can now replace running containers

It's now possible to stop a pod's container from running while the pod is intercepted. Once you leave the intercept, the pod will be restarted with its application container restored.

New "external" handler type in the Intercept Specification.

A new "external" handler type, primarily intended for integration purposes, was added to the Intercept Specification. This handler will emit all information needed to start a process that will handle intercepted traffic to a given output, but will not actually run anything.

System Tray Icon and Menu

A new telepresence icon and menu will appear in your system tray. The menu options are connect, disconnect, leave an intercept, and quit.

A telepresence quit -s will now also quit the cloud-daemon.

The cloud-daemon is designed to have the same life-cycle as the login, and therefore only exited when the user logged out. It now also exits when running telepresence quit --stop-daemons.

The helm sub-commands will no longer start the user daemon.

The telepresence helm install/upgrade/uninstall commands will no longer start the telepresence user daemon, because they don't need to connect to the traffic-manager in order to execute.

Routing table race condition

A race condition, which sometimes occurred when a Telepresence TUN device was deleted and another created in rapid succession, caused the routing table to reference interfaces that no longer existed.

Stop lingering daemon container

When using telepresence connect --docker, a lingering container could be present, causing errors like "The container name NN is already in use by container XX ...". When this happens, the connect logic now gives the container some time to stop and then calls docker stop NN to stop it before retrying to start it.

Add file locking to the Telepresence cache

Files in the Telepresence cache are accessed by multiple processes. The processes now use advisory locks on the files to guarantee consistency.

Lock connection to namespace

A connected Telepresence client is now bound to a namespace, which cannot be changed unless the client disconnects and reconnects. A connection is also given a name. The default name is composed from <kube context name>-<namespace> but can be given explicitly when connecting using --name. The connection can optionally be identified using the option --use <name match> (only needed when docker is used and more than one connection is active).

Deprecation of global --context and --docker flags.

The global flags --context and --docker are now considered deprecated unless used with commands that accept the full set of Kubernetes flags (e.g. telepresence connect).

Deprecation of the --namespace flag for the intercept command.

The --namespace flag is now deprecated for the telepresence intercept command. The flag can instead be used with all commands that accept the full set of Kubernetes flags (e.g. telepresence connect).

Legacy code predating version 2.6.0 was removed.

The telepresence code-base still contained a lot of code that would modify workloads, instead of relying on the mutating webhook installer, when a traffic-manager version predating 2.6.0 was discovered. This code has now been removed.

Add `telepresence list-namespaces` and `telepresence list-contexts` commands

These commands can be used to check accessible namespaces and for automation.

Implicit connect warning

A deprecation warning will be printed if a command other than telepresence connect causes an implicit connect to happen. Implicit connects will be removed in a future release.

Version 2.15.1 (September 06, 2023)

Rebuild with go 1.21.1

Rebuilt Telepresence with go 1.21.1 to address CVEs.

Set security context for traffic agent

OpenShift users reported that traffic agent injection was failing due to a missing security context.

Version 2.15.0 (August 28, 2023)

When logging out you will now automatically be disconnected

Now that login is always required for Telepresence commands, logging out also disconnects you from any existing sessions.

Add ASLR to binaries not in docker

Addresses an issue found during penetration testing.

Ensure that the x-telepresence-intercept-id header is read-only.

The system assumes that the x-telepresence-intercept-id header, when present, contains the ID of the intercept. Attempts to redefine it now result in an error instead of causing a malfunction when using preview URLs.

Fix parsing of multiple --http-header arguments

An intercept using multiple header flags, e.g. --http-header a=b --http-header x=y, would incorrectly assemble them into one header, --http-header a=b,x=y, which was then interpreted as a match for the header a with the value b,x=y.

Fixed bug in telepresence status when apikey login fails

When the Docker Desktop extension issued a telepresence status command with an expired or invalid API key, the extension would get stuck in an authentication loop. This bug has been fixed.

Version 2.14.4 (August 23, 2023)

Nil pointer exception when upgrading the traffic-manager.

Upgrading the traffic-manager using telepresence helm upgrade would sometimes result in the Helm error message `executing "telepresence/templates/intercept-env-configmap.yaml" at <.Values.intercept.environment.excluded>: nil pointer evaluating interface {}.excluded`.

Version 2.14.2 (July 26, 2023)

Incorporation of the latest version of Telepresence.

A new version of Telepresence OSS was published.

Version 2.14.1 (July 07, 2023)

More flexible templating in the Intercept Specification.

The Sprig template functions can now be used in many unconstrained fields of an Intercept Specification, such as environments, arguments, scripts, commands, and intercept headers.

User daemon would panic during connect

An attempt to connect on a host where no login has ever been made could cause the user daemon to panic.

Version 2.14.0 (June 12, 2023)

Telepresence with Docker Compose

Telepresence is now integrated with Docker Compose. You can now use a Compose file as an Intercept Handler in your Intercept Specifications to run your local dev stack alongside an intercept.

Added the ability to exclude environment variables

You can now configure your traffic-manager to exclude certain environment variables from being propagated to your local environment while doing an intercept.
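
A minimal values.yaml sketch, assuming the setting is the intercept.environment.excluded Helm value (the key named in the 2.14.4 error message above) and that it takes a list of variable names. The variable names are hypothetical.

```yaml
# values.yaml (Helm chart): sketch only.
# Assumption: excluded is a list of environment variable names.
intercept:
  environment:
    excluded:
      - DATABASE_PASSWORD   # hypothetical variable name
      - API_TOKEN           # hypothetical variable name
```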

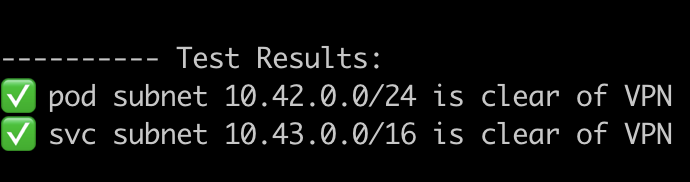

Routing conflict reporting.

Telepresence will now attempt to detect and report routing conflicts with other running VPN software on client machines. There is a new configuration flag that can be tweaked to allow certain CIDRs to be overridden by Telepresence.

Migration of Pod Daemon to the proprietary version of Telepresence

Pod Daemon has been integrated with the most recent proprietary version of Telepresence. This allows users to use the datawire/telepresence image for their deployment previews, which simplifies setting up deployment preview scenarios.

Version 2.13.3 (May 25, 2023)

Add imagePullSecrets to hooks

Add .Values.hooks.curl.imagePullSecrets and .Values.hooks curl.imagePullSecrets to Helm values.

Change reinvocation policy to Never for the mutating webhook

The default setting of the reinvocationPolicy for the mutating webhook dealing with agent injections changed from Never to IfNeeded.

Fix mount failure of the IAM roles for service accounts web identity token

The eks.amazonaws.com/serviceaccount volume injected by EKS is now exported and remotely mounted during an intercept.

Correct namespace selector for cluster versions with non-numeric characters

The mutating webhook now correctly applies the namespace selector even if the cluster version contains non-numeric characters. For example, it can now handle versions such as Major:"1", Minor:"22+".

Enable IPv6 on the telepresence docker network

The "telepresence" Docker network will now propagate DNS AAAA queries to the Telepresence DNS resolver when it runs in a Docker container.

Fix the crash when intercepting with --local-only and --docker-run

Running telepresence intercept --local-only --docker-run no longer results in a panic.

Fix incorrect error message with local-only mounts

Running telepresence intercept --local-only --mount false no longer results in an incorrect error message saying "a local-only intercept cannot have mounts".

Specify port in hook URLs

The Helm chart now correctly uses a custom agentInjector.webhook.port in hook URLs; previously the custom port was not set there.
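
For illustration, a custom webhook port would be set in values.yaml roughly as follows. The port number is an arbitrary example; the chart default became 443 in 2.8.3.

```yaml
# values.yaml (Helm chart): sketch only; 8443 is an arbitrary example value.
agentInjector:
  webhook:
    port: 8443
```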

Fix wrong default value for disableGlobal and agentArrival

Params .intercept.disableGlobal and .timeouts.agentArrival are now correctly honored.

Version 2.13.2 (May 12, 2023)

Authenticator Service Update

Replaced / characters with a - when the authenticator service creates the kubeconfig in the Telepresence cache.

Enhanced DNS Search Path Configuration for Windows (Auto, PowerShell, and Registry Options)

Configurable strategy (auto, powershell, or registry) to set the global DNS search path on Windows. The default is auto, which means try powershell first and, if it fails, fall back to registry.

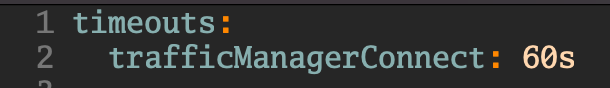

Configurable Traffic Manager Timeout in values.yaml

The timeout for the traffic manager to wait for the traffic agent to arrive can now be configured in the values.yaml file using timeouts.agentArrival. The default timeout is still 30 seconds.
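
A values.yaml sketch that raises the timeout from its 30-second default. The duration-string format (60s) is an assumption, not confirmed by this note.

```yaml
# values.yaml (Helm chart): sketch only.
# Assumption: the timeout is expressed as a duration string such as "60s".
timeouts:
  agentArrival: 60s   # default is 30 seconds
```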

Enhanced Local Cluster Discovery for macOS and Windows

The automatic discovery of a local container-based cluster (minikube or kind), used when the Telepresence daemon runs in a container, now works on macOS and Windows, and with different profiles, ports, and cluster names.

FTP Stability Improvements

Multiple simultaneous intercepts can transfer large files bidirectionally and in parallel.

Intercepted Persistent Volume Pods No Longer Cause Timeouts

Pods using persistent volumes no longer cause timeouts when intercepted.

Successful 'Telepresence Connect' Regardless of DNS Configuration

Ensure that `telepresence connect` succeeds even when DNS isn't configured correctly.

Traffic-Manager's 'Close of Closed Channel' Panic Issue

The traffic-manager would sometimes panic with a "close of closed channel" message and exit.

Traffic-Manager's Type Cast Panic Issue

The traffic-manager would sometimes panic and exit after some time due to a type cast panic.

Login Friction

Improve login behavior by clearing the saved intermediary API keys when a user logs in, forcing Telepresence to generate new ones.

Version 2.13.1 (April 20, 2023)

Update ambassador-telepresence-agent to version 1.13.13

Fixes a malfunction of the Ambassador Telepresence Agent caused by an update that compressed the executable file.

Version 2.13.0 (April 18, 2023)

Better kind / minikube network integration with docker

The Docker network used by a Kind or Minikube installation (using the "docker" driver) is automatically detected and connected to a Docker container running the Telepresence daemon.

New mapped namespace output

Mapped namespaces are included in the output of the telepresence status command.

Setting of the target IP of the intercept

There's a new --address flag to the intercept command allowing users to set the target IP of the intercept.

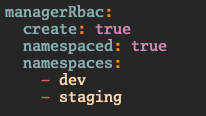

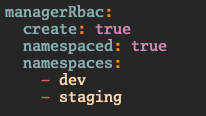

Multi-tenant support

The client will no longer need cluster wide permissions when connected to a namespace scoped Traffic Manager.

Cluster domain resolution bugfix

The Traffic Manager now uses a fail-proof way to determine the cluster domain.

Windows DNS

DNS on Windows is more reliable and performant.

Agent injection with huge amount of deployments

The agent is now correctly injected even with a high number of deployments starting at the same time.

Self-contained kubeconfig with Docker

The kubeconfig is made self-contained before running Telepresence daemon in a Docker container.

Version command error

The version command won't throw an error anymore if there is no kubeconfig file defined.

Intercept Spec CRD v1alpha1 deprecated

Please use version v1alpha2 of the intercept spec crd.

Version 2.12.2 (April 04, 2023)

Update Golang build version to 1.20.3

Update Golang to 1.20.3 to address CVE-2023-24534, CVE-2023-24536, CVE-2023-24537, and CVE-2023-24538

Version 2.12.1 (March 22, 2023)

Additions to gather-logs

Telepresence now includes the kubeauth logs when running the gather-logs command

Airgapped Clusters can once again create personal intercepts

Telepresence on airgapped clusters regained the ability to use the skipLogin config option to bypass login and create personal intercepts.

Environment Variables are now propagated to kubeauth

Telepresence now propagates environment variables properly to the kubeauth-foreground to be used with cluster authentication

Version 2.12.0 (March 20, 2023)

Intercept spec can build images from source

Handlers in the Intercept Specification can now specify a build property instead of an image, so that the image is built when the spec runs.

Improve volume mount experience for Windows and Mac users

On macOS and Windows platforms, the installation of sshfs or platform specific FUSE implementations such as macFUSE or WinFSP are no longer needed when running an Intercept Specification that uses docker images.

Check for service connectivity independently from pod connectivity

Telepresence now enables you to check for a service and pod's connectivity independently, so that it can proxy one without proxying the other.

Fix cluster authentication when running the telepresence daemon in a docker container.

Authentication to EKS and GKE clusters has been fixed (k8s >= v1.26).

The Intercept spec image pattern now allows nested and sha256 images.

Telepresence Intercept Specifications now handle passing nested images or the sha256 of an image

Fix panic when CNAME of kubernetes.default doesn't contain .svc

Telepresence no longer panics when a CNAME does not contain .svc.

Version 2.11.1 (February 27, 2023)

Multiple architectures

The multi-arch build for the ambassador-telepresence-manager and ambassador-telepresence-agent now works for both amd64 and arm64.

Ambassador agent Helm chart duplicates

Some labels in the Helm chart for the Ambassador Agent were duplicated, causing problems for FluxCD.

Version 2.11.0 (February 22, 2023)

Intercept specification

It is now possible to leverage the intercept specification to spin up your environment without extra tools.

Support for arm64 (Apple Silicon)

The ambassador-telepresence-manager and ambassador-telepresence-agent are now distributed as multi-architecture images and can run natively on both linux/amd64 and linux/arm64.

Connectivity check can break routing in VPN setups

The connectivity check failed to recognize that the connected peer wasn't a traffic-manager. Consequently, it didn't proxy the cluster, because it incorrectly assumed that a successful connect meant cluster connectivity.

VPN routes not detected by `telepresence test-vpn` on macOS

The telepresence test-vpn command did not include routes of type link when checking for subnet conflicts.

Version 2.10.5 (February 06, 2023)

mTLS secrets mount

mTLS Secrets are now mounted into the traffic agent, instead of the agent being expected to read them from the API. This only applies to users of team mode and the proprietary agent.

Daemon reconnection fix

Fixed a bug that prevented the local daemons from automatically reconnecting to the traffic manager when the network connection was lost.

Version 2.10.4 (January 20, 2023)

Backward compatibility restored

Telepresence can now create intercepts with traffic-managers of version 2.9.5 and older.

Saved intercepts now work with preview URLs.

Preview URLs are now included/excluded correctly when using saved intercepts.

Version 2.10.3 (January 17, 2023)

Saved intercepts

Fixed an issue which was causing the saved intercepts to not be completely interpreted by telepresence.

Traffic manager restart during upgrade to team mode

Fixed an issue which was causing the traffic manager to be redeployed after an upgrade to the team mode.

Version 2.10.2 (January 16, 2023)

Version consistency in Helm commands

Ensure that the CLI and user-daemon binaries are the same version when running telepresence helm install or telepresence helm upgrade.

Release Process

Fixed an issue that prevented the --use-saved-intercept flag from working.

Version 2.10.1 (January 11, 2023)

Release Process

Fixed a regex in our release process that prevented 2.10.0 promotion.

Version 2.10.0 (January 11, 2023)

Team Mode and Single User Mode

The Traffic Manager can now be set to either "team" mode or "single user" mode. When in team mode, intercepts will default to http intercepts.

Added `install` and `upgrade` Subcommands to `telepresence helm`

The `telepresence helm` sub-commands `install` and `upgrade` now accept all types of Helm `--set-XXX` flags.

Added Image Pull Secrets to Helm Chart

Image pull secrets for the traffic-agent can now be added using the Helm chart setting `agent.image.pullSecrets`.
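
A sketch of the setting, assuming agent.image.pullSecrets follows the standard Kubernetes imagePullSecrets shape (a list of objects with a name field). The secret name is hypothetical.

```yaml
# values.yaml (Helm chart): sketch only.
# Assumption: same shape as a pod's imagePullSecrets list.
agent:
  image:
    pullSecrets:
      - name: my-registry-credentials   # hypothetical Secret name
```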

Rename Configmap

The configmap `traffic-manager-clients` has been renamed to `traffic-manager`.

Webhook Namespace Field

If the cluster is Kubernetes 1.21 or later, the mutating webhook will find the correct namespace using the label `kubernetes.io/metadata.name` rather than `app.kubernetes.io/name`.

Rename Webhook

The name of the mutating webhook now contains the namespace of the traffic-manager so that the webhook is easier to identify when there are multiple namespace scoped telepresence installations in the cluster.

OSS Binaries

The OSS Helm chart is no longer pushed to the datawire Helm repository. It will instead be pushed from the telepresence proprietary repository. The OSS Helm chart is still what's embedded in the OSS telepresence client.

Fix Panic Using `--docker-run`

Telepresence no longer panics when `--docker-run` is combined with `--name <name>` instead of `--name=<name>`.

Stop assuming cluster domain

Telepresence traffic-manager extracts the cluster domain (e.g. "cluster.local") using a CNAME lookup for "kubernetes.default" instead of "kubernetes.default.svc".

Uninstall hook timeout

A timeout was added to the pre-delete hook `uninstall-agents`, so that a helm uninstall doesn't hang when there is no running traffic-manager.

Uninstall hook check

The `Helm.Revision` is now used to prevent Helm hook calls from being served by the wrong revision of the traffic-manager.

Version 2.9.5 (December 08, 2022)

Update to golang v1.19.4

Apply security updates by updating to golang v1.19.4

GCE authentication

Fixed a regression, introduced in 2.9.3, that prevented use of GCE authentication without also having a config element present in the GCE configuration in the kubeconfig.

Version 2.9.4 (December 02, 2022)

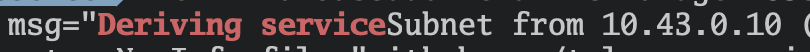

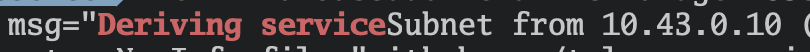

Subnet detection strategy

The traffic-manager can automatically detect that the node subnets are different from the pod subnets, and switch detection strategy to instead use subnets that cover the pod IPs.

Fix `--set` flag for `telepresence helm install`

The `--set x=y` flag of the `telepresence helm` command didn't correctly set values of types other than `string`. The code now uses standard Helm semantics for this flag.

Fix `agent.image` setting propagation

Telepresence now uses the correct `agent.image` properties in the Helm chart when copying agent image settings from the `config.yml` file.

Delay file sharing until needed

Initialization of FTP type file sharing is delayed, so that setting it using the Helm chart value `intercept.useFtp=true` works as expected.
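
The Helm chart value referred to above would look like this in values.yaml (a sketch that uses only the key and value named in the note):

```yaml
# values.yaml (Helm chart): enable FTP-based file sharing for intercepts.
intercept:
  useFtp: true
```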

Cleanup on `telepresence quit`

The port-forward that is created when Telepresence connects to a cluster is now properly closed when `telepresence quit` is called.

Watch `config.yml` without panic

The user daemon no longer panics when the `config.yml` is modified at a time when the user daemon is running but no session is active.

Thread safety

Fix a race condition that would occur when `telepresence connect` and `telepresence leave` were called several times in rapid succession.

Version 2.9.3 (November 23, 2022)

Helm options for `livenessProbe` and `readinessProbe`

The helm chart now supports `livenessProbe` and `readinessProbe` for the traffic-manager deployment, so that the pod automatically restarts if it doesn't respond.

Improved network communication

The root daemon now communicates directly with the traffic-manager instead of routing all outbound traffic through the user daemon.

Root daemon debug logging

Using `telepresence loglevel LEVEL` now also sets the log level in the root daemon.

Multivalue flag value propagation

Multi valued kubernetes flags such as `--as-group` are now propagated correctly.

Root daemon stability

The root daemon would sometimes hang indefinitely when quit and connect were called in rapid succession.

Base DNS resolver

Don't use `systemd.resolved` base DNS resolver unless cluster is proxied.

Version 2.9.2 (November 16, 2022)

Fix panic

Fix panic when connecting to an older traffic-manager.

Fix header flag

Fix an issue where the `http-header` flag sometimes wouldn't propagate correctly.

Version 2.9.1 (November 16, 2022)

Connect failures due to missing auth provider.

The regression in 2.9.0 that caused a `no Auth Provider found for name “gcp”` error when connecting was fixed.

Version 2.9.0 (November 15, 2022)

New command to view client configuration.

A new telepresence config view command was added to make it easy to view the current client configuration.

Configure Clients using the Helm chart.

The traffic-manager can now configure all clients that connect to it, using the client: map in the values.yaml file.

The Traffic manager version is more visible.

The command telepresence version will now include the version of the traffic manager when the client is connected to a cluster.

Command output in YAML format.

The global --output flag now accepts both yaml and json.

Deprecated status command flag

The telepresence status --json flag is deprecated. Use telepresence status --output=json instead.

Unqualified service name resolution in docker.

Unqualified service names now resolve correctly from the docker container when using telepresence intercept --docker-run.

Output no longer mixes plaintext and json.

Informational messages that don't really originate from the command, such as "Launching Telepresence Root Daemon", or "An update of telepresence ...", are discarded instead of being printed as plain text before the actual formatted output when using --output=json.

No more panic when invalid port names are detected.

A `telepresence intercept` of services with invalid port names no longer causes a panic.

Proper errors for bad output formats.

An attempt to use an invalid value for the global --output flag now renders a proper error message.

Remove lingering DNS config on macOS.

Files lingering under /etc/resolver as a result of an ungraceful shutdown of the root daemon on macOS are now removed when a new root daemon starts.

Version 2.8.5 (November 2, 2022)

CVE-2022-41716

Updated Golang to 1.19.3 to address CVE-2022-41716.

Version 2.8.4 (November 2, 2022)

Release Process

This release resulted in changes to our release process.

Version 2.8.3 (October 27, 2022)

Ability to disable global intercepts.

Global intercepts (a.k.a. TCP intercepts) can now be disabled by using the new Helm chart setting intercept.disableGlobal.

Configurable mutating webhook port

The port used for the mutating webhook can be configured using the Helm chart setting agentInjector.webhook.port.

Mutating webhook port defaults to 443

The default port for the mutating webhook is now 443. It used to be 8443.

Agent image configuration mandatory in air-gapped environments.

The traffic-manager will no longer default to using the tel2 image for the traffic-agent when it is unable to connect to Ambassador Cloud. Air-gapped environments must declare what image to use in the Helm chart.

Can now connect to non-helm installs

telepresence connect now works as long as the traffic manager is installed, even if it wasn't installed via `helm install`.

check-vpn crash fixed

telepresence check-vpn no longer crashes when the daemons don't start properly.

Version 2.8.2 (October 15, 2022)

Reinstate 2.8.0

There was an issue downloading the free enhanced client. This problem was fixed and 2.8.0 was reinstated.

Version 2.8.1 (October 14, 2022)

Rollback 2.8.0

Rolled back 2.8.0 while we investigated an issue with Ambassador Cloud.

Version 2.8.0 (October 14, 2022)

Improved DNS resolver

The Telepresence DNS resolver is now capable of resolving queries of type A, AAAA, CNAME, MX, NS, PTR, SRV, and TXT.

New `client` structure in Helm chart

A new

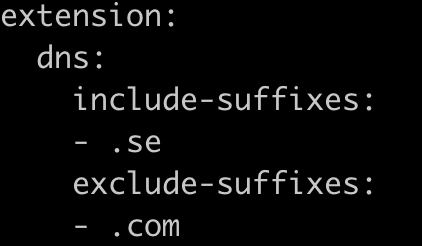

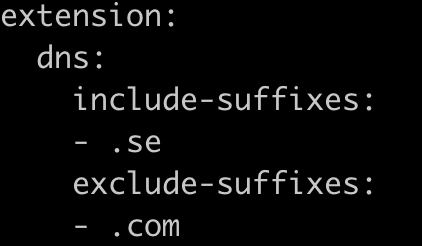

client struct was added to the Helm chart. It contains a connectionTTL that controls how long the traffic manager will retain a client connection without seeing any sign of life from the client.Include and exclude suffixes configurable using the Helm chart.

A dns element was added to the client struct in the Helm chart. It contains includeSuffixes and excludeSuffixes values that control which names the DNS resolver in the client delegates to the cluster.

Configurable traffic-manager API port

The API port used by the traffic-manager is now configurable using the Helm chart value apiPort. The default port is 8081.

Envoy server and admin port configuration.

An new

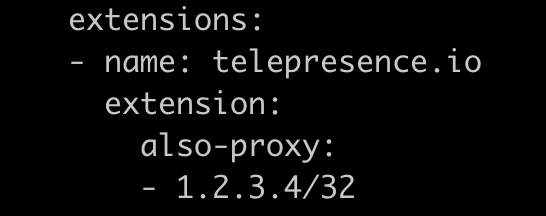

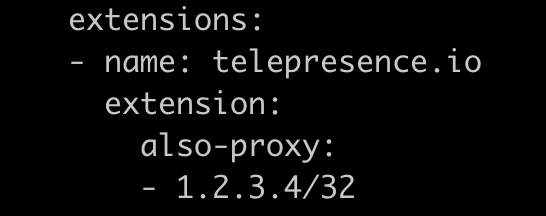

agent struct was added to the Helm chart. It contains an `envoy` structure where the server and admin port of the Envoy proxy running in the enhanced traffic-agent can be configured.Helm chart `dnsConfig` moved to `client.routing`.

The Helm chart value dnsConfig was deprecated but retained for backward compatibility. The fields alsoProxySubnets and neverProxySubnets can now be found under routing in the client struct.

Helm chart `agentInjector.agentImage` moved to `agent.image`.

The Helm chart value agentInjector.agentImage was moved to agent.image. The old value is deprecated but retained for backward compatibility.

Helm chart `agentInjector.appProtocolStrategy` moved to `agent.appProtocolStrategy`.

The Helm chart value agentInjector.appProtocolStrategy was moved to agent.appProtocolStrategy. The old value is deprecated but retained for backward compatibility.

Helm chart `dnsServiceName`, `dnsServiceNamespace`, and `dnsServiceIP` removed.

The Helm chart values dnsServiceName, dnsServiceNamespace, and dnsServiceIP have been removed because they are no longer needed. The TUN-device will use the traffic-manager pod-IP on platforms where it needs to dedicate an IP for its local resolver.

Quit daemons with `telepresence quit -s`

The former options `-u` and `-r` for `telepresence quit` have been deprecated and replaced with a single option, `-s`, which quits both the root daemon and the user daemon.

Environment variable interpolation in pods now works.

Environment variable interpolation now works for all definitions that are copied from pod containers into the injected traffic-agent container.

Early detection of namespace conflict

An attempt to create simultaneous intercepts that span multiple namespaces on the same workstation is detected early and prohibited, instead of resulting in failing DNS lookups later on.

Annoying log message removed

Spurious and incorrect "!! SRV xxx" messages will no longer appear in the logs when the reason is normal context cancellation.

Single name DNS resolution in Docker on Linux host

Single label names now resolve correctly when using Telepresence in Docker on a Linux host.

Misnomer `appPortStrategy` in Helm chart renamed to `appProtocolStrategy`.

The Helm chart value appProtocolStrategy is now correctly named (it used to be appPortStrategy).

Version 2.7.6 (September 16, 2022)

Helm chart resource entries for injected agents

The resources for the traffic-agent container and the optional init container can be specified in the Helm chart using the resources and initResource fields of agentInjector.agentImage.

Cluster event propagation when injection fails

When the traffic-manager fails to inject a traffic-agent, the cause for the failure is detected by reading the cluster events, and propagated to the user.

FTP-client instead of sshfs for remote mounts

Telepresence can now use an embedded FTP client and load an existing FUSE library instead of running an external sshfs or sshfs-win binary. This feature is experimental in 2.7.x and is enabled by setting intercept.useFtp to true in the config.yml.

Upgrade of winfsp

Telepresence on Windows upgraded winfsp from version 1.10 to 1.11.

Removal of invalid warning messages

Running CLI commands on Apple M1 machines will no longer throw warnings about /proc/cpuinfo and /proc/self/auxv.

Version 2.7.5 (September 14, 2022)

Rollback of release 2.7.4

This release is a rollback of the changes in 2.7.4, so it is essentially the same as 2.7.3.

Version 2.7.4 (September 14, 2022)

This release was broken on some platforms. Use 2.7.6 instead.

Version 2.7.3 (September 07, 2022)

PTY for CLI commands

CLI commands that are executed by the user daemon now use a pseudo TTY. This enables docker run -it to allocate a TTY, and gives other commands, such as bash read, the same behavior as when they are executed directly in a terminal.

Traffic Manager useless warning silenced

The traffic-manager will no longer log numerous warnings saying "Issuing a systema request without ApiKey or InstallID may result in an error".

Traffic Manager useless error silenced

The traffic-manager will no longer log an error saying "Unable to derive subnets from nodes" when the podCIDRStrategy is auto and it instead chooses to derive the subnets from the pod IPs.

Version 2.7.2 (August 25, 2022)

Autocompletion scripts

Autocompletion scripts can now be generated with telepresence completion SHELL, where SHELL can be bash, zsh, fish, or powershell.

Connectivity check timeout

The timeout for the initial connectivity check that Telepresence performs to determine whether the cluster's subnets are proxied can now be configured in the config.yml file using timeouts.connectivityCheck. The default timeout was changed from 5 seconds to 500 milliseconds to speed up the actual connect.

gather-traces feedback

The command telepresence gather-traces now prints a message on success.

upload-traces feedback

The command telepresence upload-traces now prints a message on success.

gather-traces tracing

The command telepresence gather-traces now traces itself and reports errors with trace gathering.

CLI log level

The cli.log log is now logged at the same level as the connector.log.

Telepresence --help fixed

telepresence --help now works once more, even if there's no user daemon running.

Stream cancellation when no process intercepts

Streams created between the traffic-agent and the workstation are now properly closed when no interceptor process has been started on the workstation. This fixes a potential problem where a large number of attempts to connect to a non-existing interceptor would cause stream congestion and an unresponsive intercept.

List command excludes the traffic-manager

The telepresence list command no longer includes the traffic-manager deployment.

Version 2.7.1 (August 10, 2022)

Reinstate telepresence uninstall

telepresence uninstall is reinstated, with --everything deprecated.

Reduce telepresence helm uninstall

telepresence helm uninstall will only uninstall the traffic-manager Helm chart and no longer accepts the --everything, --agent, or --all-agents flags.

Auto-connect for telepresence intercept

telepresence intercept will attempt to connect to the traffic manager before creating an intercept.

Version 2.7.0 (August 07, 2022)

Saved Intercepts

Create telepresence intercepts based on existing Saved Intercepts configurations with telepresence intercept --use-saved-intercept $SAVED_INTERCEPT_NAME

Distributed Tracing

The Telepresence components now collect OpenTelemetry traces. Up to 10 MB of trace data is available for collection from the components at any given time.

telepresence gather-traces is a new command that will collect all that data and place it into a gzip file, and telepresence upload-traces is a new command that will push the gzipped data into an OTLP collector.

Helm install

A new telepresence helm command was added to provide an easy way to install, upgrade, or uninstall the telepresence traffic-manager.

Ignore Volume Mounts

The agent injector now supports a new annotation, telepresence.getambassador.io/inject-ignore-volume-mounts, that can be used to make the injector ignore specified volume mounts, given as a comma-separated string.

telepresence pod-daemon

The Docker image now contains a new program in addition to the existing traffic-manager and traffic-agent: the pod-daemon. The pod-daemon is a trimmed-down version of the user-daemon that is designed to run as a sidecar in a Pod, enabling CI systems to create preview deploys.

Prometheus support for traffic manager

Added Prometheus support to the traffic manager.

No install on telepresence connect

The traffic manager is no longer automatically installed into the cluster. Connecting or creating an intercept in a cluster without a traffic manager will return an error.

Helm Uninstall

The command telepresence uninstall has been moved to telepresence helm uninstall.

readOnlyRootFilesystem mounts work

An emptyDir volume and volume mount under /tmp are now added to the agent sidecar so that it works with `readOnlyRootFilesystem: true`.

Version 2.6.8 (June 23, 2022)

Specify Your DNS

The name and namespace for the DNS Service that the traffic-manager uses in DNS auto-detection can now be specified.

Specify a Fallback DNS

Should the DNS auto-detection logic in the traffic-manager fail, users can now specify a fallback IP to use.

Intercept UDP Ports

It is now possible to intercept UDP ports with Telepresence and also to use --to-pod to forward UDP traffic from ports on localhost.

Additional Helm Values

The Helm chart will now add the nodeSelector, affinity, and tolerations values to the traffic-manager's post-upgrade-hook and pre-delete-hook jobs.

Agent Injection Bugfix

Telepresence no longer fails to inject the traffic agent into the pod generated for workloads that have no volumes and `automountServiceAccountToken: false`.
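A minimal workload matching this case might look like the sketch below: a Deployment whose pod template declares no volumes and disables service-account token mounting. The names, labels, and image are hypothetical placeholders.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: example-app              # hypothetical name
spec:
  selector:
    matchLabels:
      app: example-app
  template:
    metadata:
      labels:
        app: example-app
    spec:
      # No service-account token is mounted and no other volumes are declared.
      automountServiceAccountToken: false
      containers:
        - name: app
          image: example/app:1.0   # hypothetical image
          ports:
            - containerPort: 8080
```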

Version 2.6.7 (June 22, 2022)

Persistent Sessions

The Telepresence client will remember and reuse the traffic-manager session after a network failure or other reason that caused an unclean disconnect.

DNS Requests

Telepresence will no longer forward DNS requests for "wpad" to the cluster.

Graceful Shutdown

The traffic-agent will properly shut down if one of its goroutines errors.

Version 2.6.6 (June 9, 2022)

Env Var `TELEPRESENCE_API_PORT`

The propagation of the TELEPRESENCE_API_PORT environment variable now works correctly.

Double Printing `--output json`

The --output json global flag no longer outputs multiple objects.

Version 2.6.5 (June 03, 2022)

Helm Option -- `reinvocationPolicy`

The reinvocationPolicy of the traffic-agent injector webhook can now be configured using the Helm chart.

Helm Option -- Proxy Certificate

The traffic manager now accepts a root CA for a proxy, allowing it to connect to Ambassador Cloud from behind an HTTPS proxy. This can be configured through the Helm chart.

Helm Option -- Agent Injection

A policy that controls when the mutating webhook injects the traffic-agent was added, and can be configured in the Helm chart.

Windows Tunnel Version Upgrade

Telepresence on Windows upgraded wintun.dll from version 0.12 to version 0.14.1

Helm Version Upgrade

Telepresence upgraded its embedded Helm from version 3.8.1 to 3.9

Kubernetes API Version Upgrade

Telepresence upgraded its embedded Kubernetes API from version 0.23.4 to 0.24.1

Flag `--watch` Added to `list` Command

Added a --watch flag to telepresence list that can be used to watch interceptable workloads in a namespace.

Deprecated `images.webhookAgentImage`

The Telepresence configuration setting for `images.webhookAgentImage` is now deprecated. Use `images.agentImage` instead.
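In the client's config.yml, the migration amounts to moving the value from one key to the other, as in this sketch. The image name and tag are hypothetical placeholders, not a recommended agent image.

```yaml
images:
  # Deprecated key:
  # webhookAgentImage: example-agent:1.0.0
  agentImage: example-agent:1.0.0   # hypothetical image name and tag
```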

Default `reinvocationPolicy` Set to Never

The reinvocationPolicy of the traffic-agent injector webhook now defaults to Never instead of IfNeeded, so that LimitRanges on namespaces can inject a missing resources element into the injected traffic-agent container.

UDP

UDP based communication with services in the cluster now works as expected.

Telepresence `--help`

The command help will only show Kubernetes flags on the commands that support them.

Error Count

Only the errors from the last session will be considered when counting the number of errors in the log after a command failure.

Version 2.6.4 (May 23, 2022)

Upgrade RBAC Permissions

The traffic-manager RBAC grants permissions to update services, deployments, replicasets, and statefulsets. Those permissions are needed when the traffic-manager upgrades from versions < 2.6.0 and can be revoked after the upgrade.

Version 2.6.3 (May 20, 2022)

Relative Mount Paths

The --mount intercept flag now handles relative mount points correctly on non-Windows platforms. Windows still requires the argument to be a drive letter followed by a colon.

Traffic Agent Config

The traffic-agent's configuration is now updated automatically when services are added, updated, or deleted.

Container Injection for Numeric Ports

Telepresence will now always inject an initContainer when the service's targetPort is numeric.
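For reference, a numeric targetPort is one written as a port number rather than as the name of a container port. In the hypothetical Service below, `targetPort: 8080` is numeric, so per this note Telepresence injects an initContainer into the pods it targets. The names and labels are placeholders.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: example-svc        # hypothetical name
spec:
  selector:
    app: example-app       # hypothetical label
  ports:
    - port: 80
      targetPort: 8080     # numeric; a named targetPort would reference a container port name instead
```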

Matching Services

Workloads that have several matching services pointing to the same target port are now handled correctly.

Unexpected Panic

A potential race condition causing a panic when closing a DNS connection is now handled correctly.

Mount Volume Cleanup

A container start would sometimes fail because an old directory remained in a mounted temp volume.

Version 2.6.2 (May 17, 2022)

Argo Injection

Workloads controlled by other workloads, such as an Argo Rollout, are now injected correctly.

Agent Port Mapping

Multiple services pointing to the same container port no longer result in duplicated ports in an injected pod.

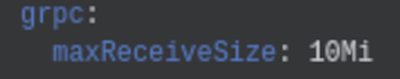

GRPC Max Message Size

The telepresence list command no longer errors out with "grpc: received message larger than max" when listing namespaces with a large number of workloads.

Version 2.6.1 (May 16, 2022)

KUBECONFIG environment variable

Telepresence will now handle multiple path entries in the KUBECONFIG environment variable correctly.

Don't Panic

Telepresence will no longer panic when using preview URLs with traffic-managers < 2.6.0

Version 2.6.0 (May 13, 2022)

Intercept multiple containers in a pod, and multiple ports per container

Telepresence can now intercept multiple services and/or service-ports that connect to the same pod.

The Traffic Agent sidecar is always injected by the Traffic Manager's mutating webhook

The client will no longer modify deployments, replicasets, or statefulsets in order to inject a Traffic Agent into an intercepted pod. Instead, all injection is now performed by a mutating webhook. As a result, the client now needs fewer permissions in the cluster.

Automatic upgrade of Traffic Agents

When upgrading, all workloads with injected agents will have their agent "uninstalled" automatically. The mutating webhook will then ensure that their pods will receive an updated Traffic Agent.

No default image in the Helm chart

The Helm chart no longer has a default set for agentInjector.image.name, and unless it's set, the traffic-manager will ask Ambassador Cloud for the preferred image.

Upgrade to Helm version 3.8.1

The Telepresence client now uses Helm version 3.8.1 when auto-installing the Traffic Manager.

Remote mounts will now function correctly with custom securityContext

The bug causing permission problems when the Traffic Agent is in a Pod with a custom securityContext has been fixed.

Improved presentation of flags in CLI help

The help for commands that accept Kubernetes flags will now display those flags in a separate group.

Better termination of process parented by intercept

Occasionally an intercept will spawn a command using -- on the command line, often in another console. When you use telepresence leave or telepresence quit while the intercept with the spawned command is still active, Telepresence will now terminate the command, because it is considered to be parented by the intercept that is being removed.

Version 2.5.8 (April 27, 2022)

Folder creation on `telepresence login`

Fixed a bug where the telepresence config folder would not be created if the user ran telepresence login before other commands.

Version 2.5.7 (April 25, 2022)

RBAC requirements

A namespaced traffic-manager will no longer require cluster-wide RBAC. Only Roles and RoleBindings are now used.

Windows DNS

The DNS recursion detector didn't work correctly on Windows, resulting in sporadic failures to resolve names that were resolved correctly at other times.

Session TTL and Reconnect

A telepresence session will now last for 24 hours after the user's last connectivity. If a session expires, the connector will automatically try to reconnect.

Version 2.5.6 (April 18, 2022)

Fewer Watchers

The Telepresence agents watcher will now only watch namespaces that the user has accessed since the last connect.

More Efficient `gather-logs`

The gather-logs command will no longer send any logs through gRPC.

Version 2.5.5 (April 08, 2022)