Microservice Orchestration Best Practices

What is a Microservice in Simple Terms?

What is Microservice Orchestration?

Why is Microservice Orchestration Necessary?

Benefits of Microservice Orchestration

Microservice Orchestration Deployment Best Practices

1. Use Containers for Microservice Packaging and Deployment

2. Implement a Monitoring and Logging Service for Your Microservices

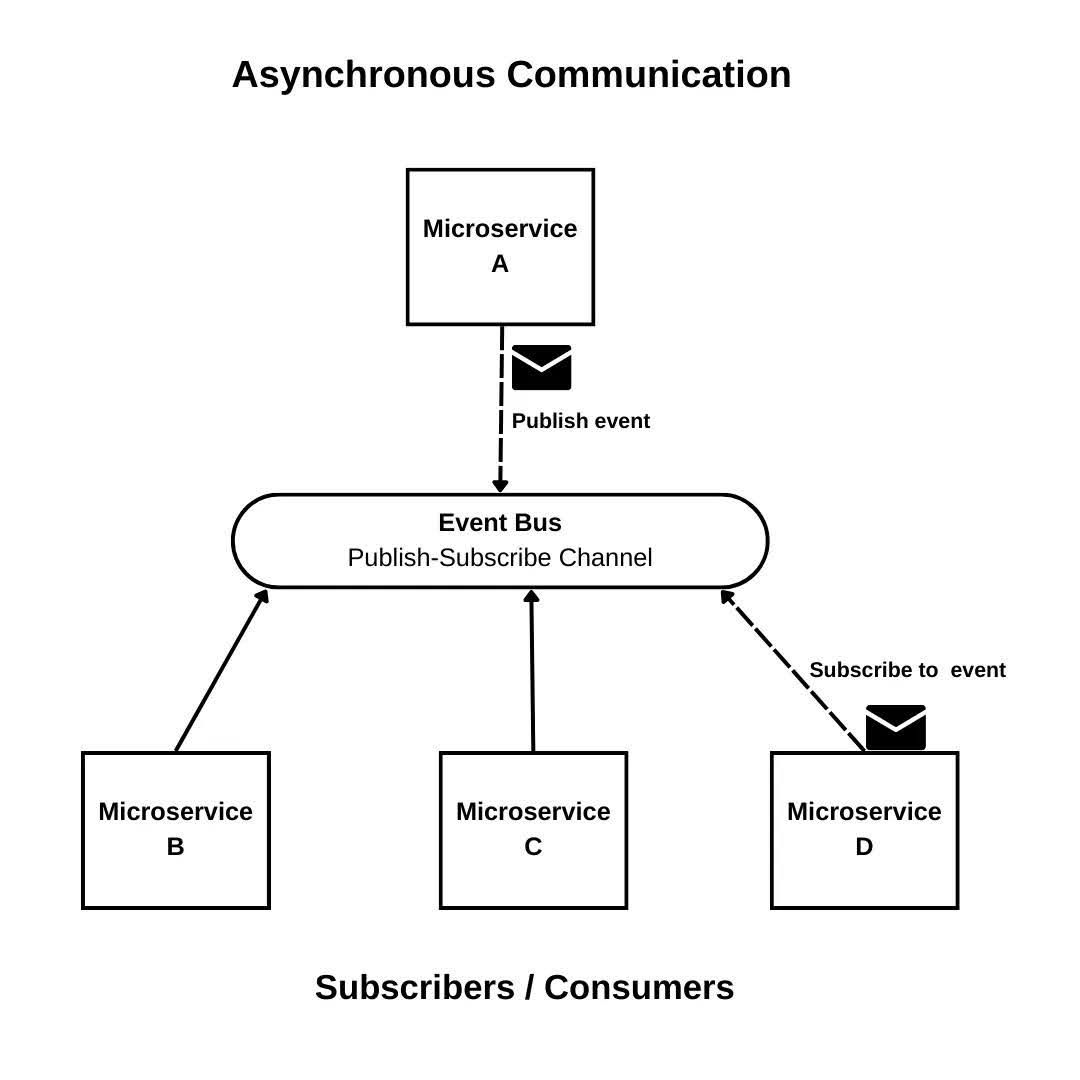

3. Use Asynchronous Communication

4. Separate your Microservice Data Storage

5. Implement Service Discovery

6. Use an API Gateway for Routing and Authentication

7. Use a Configuration Management System for Consistency

8. Design for Failure to Ensure Fault Tolerance and Resiliency

9. Use the Single Responsibility Principle (SRP)

Conclusion

When you think about it, we’ve made great strides in software development, but each step has brought new and exciting challenges. We started with big, clunky monolithic systems, then moved to smaller, independently deployable services called microservices to gain flexibility, scalability, and resilience.

However, with great power comes great responsibility. Now, we have to manage these tiny microservices in our distributed systems. This is where microservice orchestration swoops in to save the day (Yes, I just did a Tobey-Andrew-Tom Spider-Man marathon! My web developer journey feels complete 🕺).

In this article, we will briefly look at what a microservice is, why microservice orchestration is essential, and then dive into nine microservice orchestration best practices that can make the deployment of microservices much smoother.

What is a Microservice in Simple Terms?

A microservice is a small, modular, and independently deployable component of a larger software application designed to perform a business-specific task or functionality. It communicates with other microservices through well-defined APIs or protocols, such as HTTP/REST or messaging systems.

Remember when we used to build big Lego castles out of smaller Lego pieces? Each piece had a specific function (a door, a window, or a roof), and together they made up the castle we proudly showed off, filled with all the action our wildest imagination could conceive. Now imagine that castle as a complete application, with each Lego piece as a microservice.

Microservices are typically designed to be loosely coupled, meaning that they interact only through their public interfaces and can be changed, updated, or redeployed without affecting the rest of the application, as long as those interfaces stay compatible. They are usually organized around business capabilities, such as handling user authentication, processing orders, or managing inventory, rather than technical concerns like databases or servers.

What is Microservice Orchestration?

Microservice orchestration is the strategic process of integrating and managing microservices to deliver a cohesive application or service. This essential coordination covers the deployment, communication, and scaling of microservices within a distributed architecture, ensuring they operate smoothly and efficiently.

Why is Microservice Orchestration Necessary?

Consider a ride-sharing app, which requires features such as user authentication, ride requests, driver matching, payment processing, and ride tracking. Each feature is handled by a dedicated microservice. To deliver a seamless user experience, these microservices must communicate efficiently, and coordinating that communication is the job of orchestration.
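To make that coordination concrete, here is a minimal Python sketch of a central orchestrator for the ride request flow. The service functions (`authenticate`, `match_driver`, `release_driver`, `charge_rider`) and their behavior are hypothetical in-process stubs standing in for network calls to real microservices. The point is the shape: one coordinator calls each service in order and runs a compensating action when a later step fails.

```python
from dataclasses import dataclass, field

# Hypothetical stubs standing in for network calls to separate microservices.
def authenticate(token: str) -> str:
    if token != "valid-token":
        raise PermissionError("invalid token")
    return "rider-1"

def match_driver(rider_id: str, pickup: str) -> str:
    return "driver-7"

def release_driver(driver_id: str) -> None:
    pass  # would tell the matching service the driver is free again

def charge_rider(rider_id: str, amount_cents: int) -> bool:
    return amount_cents <= 10_000  # pretend charges above $100 are declined

@dataclass
class RideResult:
    status: str
    steps: list[str] = field(default_factory=list)

def request_ride(token: str, pickup: str, fare_cents: int) -> RideResult:
    """Central orchestrator: calls each service in order and compensates on failure."""
    result = RideResult(status="pending")
    rider_id = authenticate(token)
    result.steps.append("authenticated")
    driver_id = match_driver(rider_id, pickup)
    result.steps.append(f"matched:{driver_id}")
    if not charge_rider(rider_id, fare_cents):
        # Compensating action: undo the earlier step so the driver is not left reserved.
        release_driver(driver_id)
        result.steps.append("driver_released")
        result.status = "payment_declined"
        return result
    result.steps.append("charged")
    result.status = "confirmed"
    return result

if __name__ == "__main__":
    print(request_ride("valid-token", "Main St", 2_500))
```

In a real system each stub would be a remote call, so the orchestrator would also need the timeouts and retries discussed under designing for failure.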

Benefits of Microservice Orchestration

Orchestrating microservices offers several advantages, including:

- Enhanced Scalability: Individual components can be scaled independently based on their own demand.

- Improved Fault Tolerance: Helps keep the system operational even if one microservice fails.

- Increased Flexibility: Allows quick updates and changes to specific features without disrupting the entire application.

- Better Collaboration: Promotes better integration and understanding among development teams.

- Streamlined Management: Simplifies the oversight of complex distributed systems.

Microservice Orchestration Deployment Best Practices

Deploying and orchestrating microservices can become increasingly complex and daunting as the number of services and the size of the architecture grow. However, following a few best practices can significantly ease this process and make deployments smoother and more efficient. Here are some of the most recommended best practices for microservice orchestration deployment:

1. Use Containers for Microservice Packaging and Deployment

Utilizing containers for packaging and deploying microservices is a widely recognized and recommended best practice within the software development community. Containers offer several key advantages that make them an ideal choice for microservice architectures, including:

- Isolation: Each microservice runs in its own container with its own dependencies, reducing conflicts between services.

- Portability: The same container image runs across environments, from development through to production.

- Scalability and High Availability: Containers can be started, stopped, and replicated quickly, which simplifies scaling services up or down with demand and running multiple instances so services remain available.

- Enhanced Security: Containers isolate processes and filesystems, and container platforms add features such as network segmentation and secrets management.

By encapsulating each microservice in its own container complete with necessary dependencies, configurations, and code, developers can streamline the deployment process across various environments—be it development, staging, or production. This uniformity significantly eases the complexity of managing service deployments in heterogeneous environments.

Moreover, container orchestration platforms like Kubernetes and Docker Swarm play a pivotal role in optimizing resource utilization and automating many container management tasks, including the creation, configuration, and dynamic scaling of containers. This automation improves performance and reduces the potential for human error, making deployments more efficient and repeatable.
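Kubernetes' Horizontal Pod Autoscaler is one example of this automated scaling. Its documentation describes the core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change made when that ratio is within a tolerance of 1.0 (0.1 by default). The Python sketch below reproduces only that arithmetic so you can see how the replica count reacts to load; the function name and the min/max bounds are assumptions for illustration, and the real controller also accounts for pod readiness, missing metrics, and stabilization windows.

```python
import math

def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Core Horizontal Pod Autoscaler arithmetic:
    desired = ceil(current_replicas * current_metric / target_metric)."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close to the target (avoids flapping).
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (the bounds here are illustrative).
    return max(min_replicas, min(max_replicas, desired))

if __name__ == "__main__":
    # 3 pods averaging 90% CPU against a 60% target, so the deployment scales out.
    print(desired_replicas(3, current_metric=90, target_metric=60))
```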

2. Implement a Monitoring and Logging Service for Your Microservices

Microservices generate a large volume of logs, metrics, and events, which makes it challenging to identify issues quickly.

Monitoring and logging let you track the performance and health of each microservice, which is crucial for maintaining the stability and reliability of the system. Monitoring also helps you identify performance bottlenecks and optimize resource allocation, and by analyzing usage trends, you can make informed decisions about scaling your microservices.

For effective monitoring and logging, I recommend tools such as Prometheus (metrics collection), Grafana (dashboards and visualization), and the ELK stack (log aggregation and search), or other log aggregators and metrics collectors. Together, these tools give you visibility into what each service is doing, so you can spot and fix problems, ideally before they affect users.
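As a small illustration of making logs easy for an aggregator such as the ELK stack to ingest, the sketch below uses only Python's standard `logging` module to emit one JSON object per line, tagged with the service name and a correlation ID. The field names and the `payment-service` name are assumptions chosen for the example, not a required schema.

```python
import json
import logging
import sys
import uuid

class JsonFormatter(logging.Formatter):
    """Emit one JSON object per line so a log shipper can parse and index each field."""

    def __init__(self, service_name: str) -> None:
        super().__init__()
        self.service_name = service_name

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "timestamp": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "service": self.service_name,
            # Passed via `extra=`; lets you follow one request across services.
            "correlation_id": getattr(record, "correlation_id", None),
            "message": record.getMessage(),
        })

def build_logger(service_name: str) -> logging.Logger:
    logger = logging.getLogger(service_name)
    logger.setLevel(logging.INFO)
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(JsonFormatter(service_name))
    logger.handlers = [handler]
    logger.propagate = False
    return logger

if __name__ == "__main__":
    log = build_logger("payment-service")
    # In practice the ID would arrive in a request header from the calling service.
    log.info("charge authorized", extra={"correlation_id": str(uuid.uuid4())})
```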

3. Use Asynchronous Communication

Effective communication between microservices is essential for a cloud-native application to function. For many interactions, the best approach is asynchronous communication, which allows microservices to send and receive information without waiting for an immediate response from other microservices.

One simple but effective approach to asynchronous communication is the publish-subscribe pattern. When an event of interest occurs, the producing microservice publishes a record of that event to a message broker (a message queue service). Any other microservices interested in that type of event subscribe to it as consumers and receive a copy of each event.
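The publish-subscribe flow can be sketched in a single Python process, with a toy `InMemoryBroker` class standing in for a real message broker. The class, the `ride.completed` topic, and the consumer names are hypothetical. Notice that `publish` returns as soon as the event is queued; the producer never waits for the payment or notification consumers to finish.

```python
import asyncio
from collections import defaultdict

class InMemoryBroker:
    """Toy stand-in for a real broker: fans each event out to every subscriber's queue."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[asyncio.Queue]] = defaultdict(list)

    def subscribe(self, topic: str) -> asyncio.Queue:
        queue: asyncio.Queue = asyncio.Queue()
        self._subscribers[topic].append(queue)
        return queue

    def publish(self, topic: str, event: dict) -> None:
        # put_nowait returns immediately: the producer does not wait for consumers.
        for queue in self._subscribers[topic]:
            queue.put_nowait(event)

async def consumer(name: str, queue: asyncio.Queue, received: list) -> None:
    event = await queue.get()
    received.append((name, event["ride_id"]))
    queue.task_done()

async def main() -> list:
    broker = InMemoryBroker()
    received: list = []
    # Both consumers subscribe to the same topic and each gets its own copy.
    tasks = [
        asyncio.create_task(consumer("payment", broker.subscribe("ride.completed"), received)),
        asyncio.create_task(consumer("notifications", broker.subscribe("ride.completed"), received)),
    ]
    broker.publish("ride.completed", {"ride_id": 42})
    await asyncio.gather(*tasks)
    return sorted(received)

if __name__ == "__main__":
    print(asyncio.run(main()))
```

A production broker adds what this toy lacks: durability, delivery across processes and hosts, and acknowledgements so that events are not lost when a consumer crashes.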

Using asynchronous communication and the publish-subscribe pattern, each microservice can operate independently, without waiting for responses from other microservices. Because a producer does not block on its consumers, a slow or unavailable consumer is less likely to trigger failures that spread through the system.

Another best practice related to communication channels is to use standardized communication protocols and formats, such as HTTP/REST or gRPC for request/response calls and an agreed, versioned schema for event messages. This helps ensure compatibility between different microservices and makes it easier to integrate new microservices into the architecture.
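One common way to standardize event formats is to wrap every message in a shared envelope that carries its type and schema version. The sketch below shows a hypothetical `EventEnvelope` in Python that serializes to JSON and rejects versions a consumer does not understand. The field names are assumptions; in practice teams often adopt an established format such as CloudEvents, or a schema language like Protocol Buffers or Avro, instead of a hand-rolled class.

```python
import json
from dataclasses import asdict, dataclass
from typing import Any

SUPPORTED_SCHEMA_VERSIONS = {1}

@dataclass(frozen=True)
class EventEnvelope:
    """Hypothetical shared wrapper every service uses for the events it publishes."""
    event_type: str
    schema_version: int
    payload: dict[str, Any]

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, raw: str) -> "EventEnvelope":
        data = json.loads(raw)
        missing = {"event_type", "schema_version", "payload"} - set(data)
        if missing:
            raise ValueError(f"missing fields: {sorted(missing)}")
        # Reject versions this consumer does not understand instead of guessing.
        if data["schema_version"] not in SUPPORTED_SCHEMA_VERSIONS:
            raise ValueError(f"unsupported schema_version: {data['schema_version']}")
        return cls(data["event_type"], data["schema_version"], data["payload"])

if __name__ == "__main__":
    event = EventEnvelope("ride.completed", 1, {"ride_id": 42})
    print(EventEnvelope.from_json(event.to_json()))
```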

4. Separate your Microservice Data Storage

Separating data storage is another best practice that can significantly impact microservice orchestration. This principle suggests that each microservice should have its own dedicated data store rather than sharing a common data store with other microservices.

By separating data storage in this manner, managing the data of each microservice becomes more straightforward, enabling them to be developed, deployed, and managed independently. It allows for greater flexibility in scaling individual microservices, as each can have its dedicated database instance. This approach can also help avoid dependencies between services and improve security by limiting data access.
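The following Python sketch illustrates the database-per-service idea with two hypothetical services, each owning a private in-memory SQLite database. `OrderService` never reads the inventory tables; it asks `InventoryService` through a public method. In a real deployment each service would have its own database instance and the call would cross the network, so treat this as a model of the ownership boundary rather than a deployment pattern.

```python
import sqlite3

class InventoryService:
    """Owns the stock table; no other service queries it directly."""

    def __init__(self, stock: dict[str, int]) -> None:
        self._db = sqlite3.connect(":memory:")
        self._db.execute("CREATE TABLE stock (item TEXT PRIMARY KEY, qty INTEGER)")
        self._db.executemany("INSERT INTO stock VALUES (?, ?)", stock.items())
        self._db.commit()

    def reserve(self, item: str, qty: int) -> bool:
        # Atomic check-and-decrement; updates a row only if enough stock remains.
        cur = self._db.execute(
            "UPDATE stock SET qty = qty - ? WHERE item = ? AND qty >= ?", (qty, item, qty)
        )
        self._db.commit()
        return cur.rowcount == 1

    def available(self, item: str) -> int:
        row = self._db.execute("SELECT qty FROM stock WHERE item = ?", (item,)).fetchone()
        return row[0] if row else 0

class OrderService:
    """Owns the orders table in its own, separate database."""

    def __init__(self) -> None:
        self._db = sqlite3.connect(":memory:")
        self._db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, item TEXT, qty INTEGER)")

    def place_order(self, item: str, qty: int, inventory: InventoryService) -> int:
        # Cross-service access goes through InventoryService's public API, not its tables.
        if not inventory.reserve(item, qty):
            raise ValueError(f"not enough {item} in stock")
        cur = self._db.execute("INSERT INTO orders (item, qty) VALUES (?, ?)", (item, qty))
        self._db.commit()
        return cur.lastrowid
```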

5. Implement Service Discovery

As the number of microservices within an application grows, keeping track of which services are running, and where, becomes challenging. This is where service discovery tools, such as an API gateway (e.g., Edge Stack), a service mesh (e.g., Consul), or a service registry (e.g., Eureka), become invaluable. These tools let clients locate and communicate with services without having to build separate discovery logic for every programming language and framework the clients use.
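To show what a service registry does at its core, here is a toy in-memory registry in Python: instances register an address, send periodic heartbeats, and drop out of lookups once their last heartbeat is older than a time-to-live. This is a hypothetical sketch of the general register, heartbeat, and lookup cycle, not the API of Eureka, Consul, or any other product; the injectable `clock` parameter exists only so the expiry logic can be tested without waiting.

```python
import time
from typing import Callable

class ServiceRegistry:
    """Minimal registry: instances register, send heartbeats, and expire after a TTL."""

    def __init__(self, ttl_seconds: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        # service name -> {address: time of last heartbeat}
        self._instances: dict[str, dict[str, float]] = {}

    def register(self, service: str, address: str) -> None:
        self._instances.setdefault(service, {})[address] = self._clock()

    def heartbeat(self, service: str, address: str) -> None:
        # A heartbeat simply refreshes the instance's timestamp.
        self.register(service, address)

    def deregister(self, service: str, address: str) -> None:
        self._instances.get(service, {}).pop(address, None)

    def lookup(self, service: str) -> list[str]:
        now = self._clock()
        live = {addr: seen for addr, seen in self._instances.get(service, {}).items()
                if now - seen <= self._ttl}
        self._instances[service] = live  # forget expired instances
        return sorted(live)
```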

6. Use an API Gateway for Routing and Authentication

An API gateway acts as a dedicated layer for managing traffic between clients and your microservices, offering features such as routing, load balancing, and authentication. Because all external traffic passes through a single entry point, an API gateway simplifies microservice orchestration, centralizes security controls, and makes services easier to manage. Furthermore, the built-in load balancing of most API gateways, such as Edge Stack, spreads requests across service instances, which helps microservices handle large volumes of traffic while maintaining high availability and scalability. Edge Stack API Gateway, in particular, excels at distributing traffic evenly across microservices.
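The three gateway duties named above (authentication, routing, and load balancing) can be sketched in a few lines of Python. The `ApiGateway` class, route table, upstream addresses, and API keys are all hypothetical, and the sketch only reports which upstream it would forward to instead of actually proxying the request. This is not Edge Stack's configuration model; real gateways are usually configured declaratively and use stronger authentication, such as OAuth 2.0 or JWT validation.

```python
import itertools
from dataclasses import dataclass
from typing import Optional

@dataclass
class Response:
    status: int
    body: str

class ApiGateway:
    """Single entry point: checks an API key, matches a path prefix, round-robins upstreams."""

    def __init__(self, routes: dict[str, list[str]], api_keys: set[str]) -> None:
        # Longest prefix first, so "/rides/history" would win over "/rides".
        self._prefixes = sorted(routes, key=len, reverse=True)
        self._upstreams = {prefix: itertools.cycle(addrs) for prefix, addrs in routes.items()}
        self._api_keys = api_keys

    def handle(self, path: str, api_key: Optional[str]) -> Response:
        if api_key not in self._api_keys:
            return Response(401, "unauthorized")
        for prefix in self._prefixes:
            if path == prefix or path.startswith(prefix.rstrip("/") + "/"):
                upstream = next(self._upstreams[prefix])  # simple round-robin
                # A real gateway would proxy the request here; we just report the choice.
                return Response(200, f"forwarded {path} to {upstream}")
        return Response(404, "no route")
```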

7. Use a Configuration Management System for Consistency

Your microservice configuration is an important part of the entire architecture and will undergo many changes as you transition from staging to production, among other environments. A critical best practice to implement is the third principle of the Twelve-Factor App, which recommends that your app’s configurations be kept separate from the application code and stored as environment variables. This approach simplifies managing and deploying applications across different environments and infrastructures.

To achieve this effectively, a configuration management system is essential. For those unfamiliar, configuration management systems are tools that help manage the configuration of your infrastructure and applications, ensuring that microservices are deployed consistently and their configurations are kept up to date. They also facilitate easier rollback of changes, troubleshooting of issues, and enhanced security by keeping sensitive information—such as API keys or database credentials—out of the codebase and preventing accidental exposure.
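Following the Twelve-Factor approach, a service can read its configuration from environment variables at startup and fail fast if a required value is missing. The Python sketch below assumes hypothetical variable names (`DATABASE_URL`, `PAYMENT_API_KEY`, `REQUEST_TIMEOUT_S`, `LOG_LEVEL`); in practice, a configuration management system or your orchestration platform (for example, Kubernetes ConfigMaps and Secrets) would inject the values for each environment.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional

@dataclass(frozen=True)
class Settings:
    database_url: str
    payment_api_key: str
    request_timeout_s: float
    log_level: str

def load_settings(env: Optional[Mapping[str, str]] = None) -> Settings:
    """Build settings from environment variables, failing fast if required ones are missing."""
    env = os.environ if env is None else env
    required = ("DATABASE_URL", "PAYMENT_API_KEY")
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError(f"missing required environment variables: {', '.join(missing)}")
    return Settings(
        database_url=env["DATABASE_URL"],
        payment_api_key=env["PAYMENT_API_KEY"],  # secret stays out of the codebase
        request_timeout_s=float(env.get("REQUEST_TIMEOUT_S", "2.0")),
        log_level=env.get("LOG_LEVEL", "INFO"),
    )
```

Accepting an optional mapping keeps the function easy to test: callers can pass a plain dictionary instead of modifying the real process environment.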

8. Design for Failure to Ensure Fault Tolerance and Resiliency

Microservices should be designed to be fault-tolerant and resilient, enabling them to continue functioning even if one or more services fail. Implementing features like circuit breakers, retries, timeouts, graceful degradation, bulkheads, and redundancy will ensure this resilience. Let’s take a closer look at each of these features:

- Circuit Breakers: Used to protect against cascading failures. When calls to a microservice keep failing, the circuit breaker opens and rejects further calls to that service for a cooldown period instead of letting them pile up. This gives the failing service time to recover and keeps the failure from spreading. After the cooldown, a trial request is let through; if it succeeds, the circuit closes again.

- Retries: Handle temporary failures by allowing the client to retry the request after a short delay, mitigating issues caused by transient errors. Retries work best with exponential backoff, a cap on the number of attempts, and operations that are safe to repeat (idempotent).

- Timeouts: Prevent long-running requests from causing system issues by abandoning a request that takes too long to complete, so it does not tie up resources needed by other requests.

- Graceful Degradation: Involves reducing functionality in the event of a failure rather than failing outright. For example, if a search microservice fails, the application could still show cached or popular results instead of an error page.

- Bulkheads: Isolate failures by dividing the system into smaller sections, so failures in one section can be contained without affecting the entire system.

- Redundancy: Involves replicating microservices to ensure a backup is always available in the event of a failure, allowing the system to continue functioning with redundant microservices even if some services fail.
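Here is a minimal, single-threaded circuit breaker sketch in Python that shows the closed, open, and half-open states described above. The `CircuitBreaker` class and its thresholds are illustrative assumptions; production libraries add thread safety, metrics, and finer control over trial calls in the half-open state.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")

class CircuitOpenError(Exception):
    """Raised when a call is rejected because the breaker is open."""

class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout_s = reset_timeout_s
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self._opened_at is None:
            return "closed"
        if self._clock() - self._opened_at >= self.reset_timeout_s:
            return "half_open"  # cooldown over: allow a trial call
        return "open"

    def call(self, fn: Callable[[], T]) -> T:
        if self.state == "open":
            raise CircuitOpenError("circuit open; failing fast")
        try:
            result = fn()
        except Exception:
            self._failures += 1
            # A failed trial call re-opens the circuit; otherwise open at the threshold.
            if self._opened_at is not None or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            raise
        # Any success closes the circuit and resets the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```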

By implementing these practices, teams can ensure their microservices are resilient to failures and can continue to function despite unexpected issues.
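Retries, timeouts, and graceful degradation are often combined around a single remote call. The Python sketch below, built on `asyncio`, retries a call that is assumed to be idempotent, bounds each attempt with `asyncio.wait_for`, backs off exponentially with random jitter between attempts, and finally returns a fallback value (for example, cached search results) instead of raising. The function name, the defaults, and the choice to retry only on timeouts and `ConnectionError` are assumptions for illustration.

```python
import asyncio
import random
from typing import Awaitable, Callable, TypeVar

T = TypeVar("T")

async def call_with_resilience(
    operation: Callable[[], Awaitable[T]],
    fallback: T,
    attempts: int = 3,
    timeout_s: float = 0.5,
    base_delay_s: float = 0.1,
) -> T:
    """Retry a (presumed idempotent) call with a per-attempt timeout and
    exponential backoff, then degrade gracefully to a fallback value."""
    for attempt in range(attempts):
        try:
            # Timeout: give up on this attempt if it runs too long.
            return await asyncio.wait_for(operation(), timeout=timeout_s)
        except (asyncio.TimeoutError, ConnectionError):
            if attempt == attempts - 1:
                break
            # Backoff with full jitter: sleep a random time up to base * 2^attempt.
            await asyncio.sleep(random.uniform(0, base_delay_s * 2 ** attempt))
    # Graceful degradation: return reduced but useful data instead of an error.
    return fallback
```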

9. Use the Single Responsibility Principle (SRP)

The Single Responsibility Principle (SRP) is a software design principle that states a class or module should have only one reason to change. While SRP is not directly related to microservice orchestration, it is still considered a best practice in software development.

In the context of microservices, SRP can be applied to ensure each microservice has a clear and specific responsibility, avoiding unnecessary dependencies on other microservices. This approach enables developers to create more cohesive and maintainable microservices. It simplifies the orchestration, deployment, and management of microservices, as each is designed with a clear purpose and a defined set of dependencies.

Conclusion

While microservice orchestration presents challenges, adhering to these best practices streamlines the process and facilitates a seamless deployment. Happy building!
